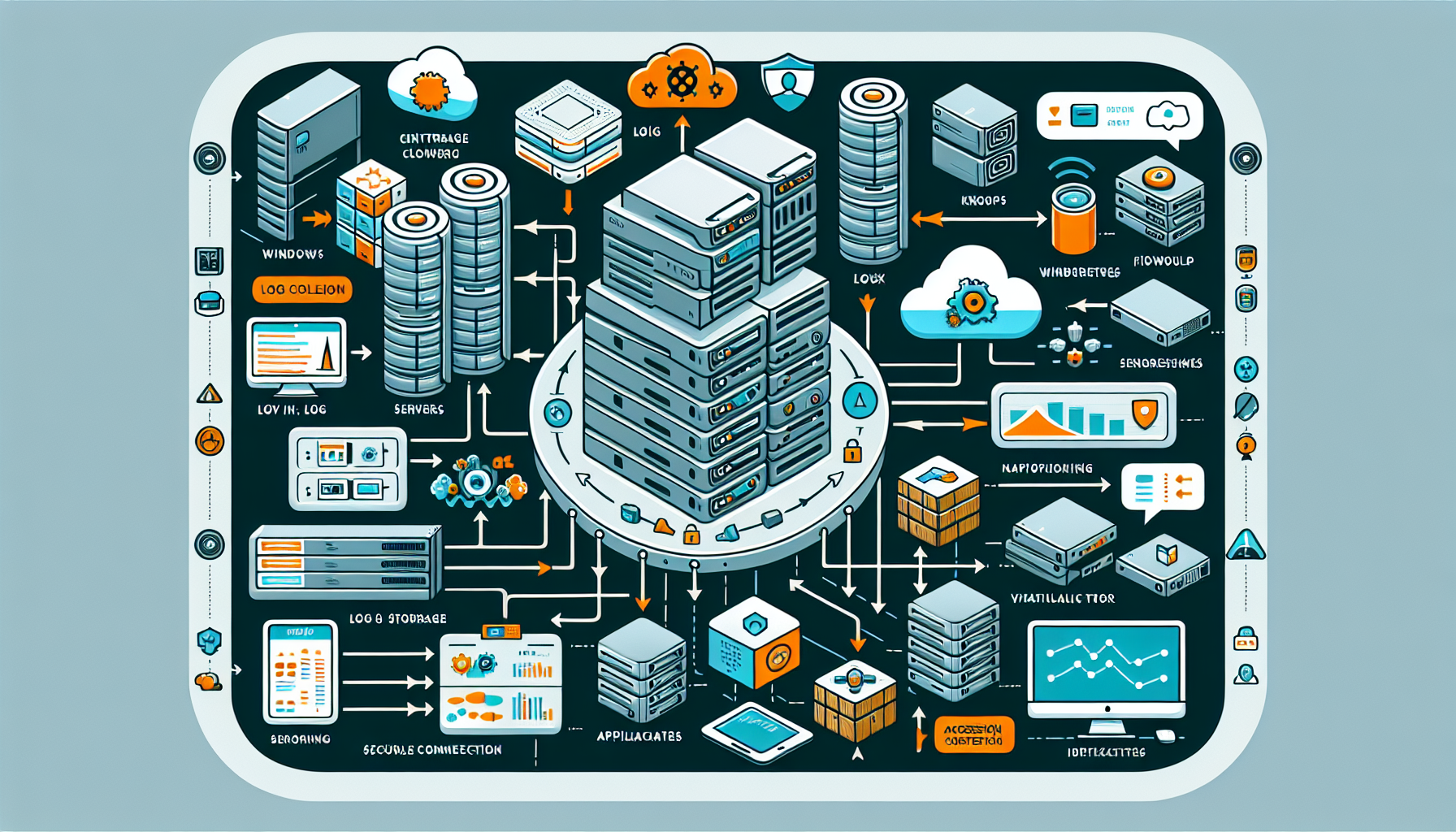

Setting up a centralized logging system for your IT infrastructure is critical for monitoring, troubleshooting, and securing your environment. Below is a step-by-step guide to building a centralized logging system:

1. Define the Scope and Requirements

- Identify the systems, applications, and services to log (e.g., servers, firewalls, applications, Kubernetes clusters, virtualization platforms, etc.).

- Determine the type of logs to collect (e.g., system logs, application logs, security/audit logs, storage logs, etc.).

- Decide on retention policies, storage capacity, and compliance requirements (e.g., GDPR, ISO 27001).

- Choose between on-premises and cloud-based logging solutions.
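Retention and storage capacity are easier to decide with a rough number in hand. The following is a minimal sketch of such an estimate; the function name, the single replica, and the 20% indexing overhead are illustrative assumptions, not figures from any particular product.

```python
def estimate_storage_gb(daily_ingest_gb: float, retention_days: int,
                        replicas: int = 1, overhead: float = 0.2) -> float:
    """Rough storage estimate for retained logs, in gigabytes.

    replicas: extra copies kept for availability (assumed default: 1).
    overhead: fractional indexing/metadata overhead (assumed default: 20%).
    """
    copies = 1 + replicas  # the primary copy plus each replica
    return daily_ingest_gb * retention_days * copies * (1 + overhead)
```

Under these assumptions, 50 GB of logs per day kept for 90 days comes to about 10,800 GB. Measure your real ingest volume and overhead before sizing hardware.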

2. Choose a Centralized Logging Solution

- Select a centralized logging tool based on your needs:

- On-Premises Options:

- ELK/Elastic Stack (Elasticsearch, Logstash, Kibana)

- Graylog

- Splunk Enterprise

- Cloud-Based Options:

- Amazon CloudWatch, Azure Monitor, or Google Cloud Logging

- Datadog

- Loggly

- Splunk Cloud

- Kubernetes-Specific Tools:

- Fluentd/Fluent Bit

- Loki with Grafana

- Ensure the solution supports integration with your existing IT stack (Windows, Linux, Kubernetes, etc.).

3. Provision the Logging Server/Infrastructure

- For on-premises:

- Set up a dedicated server or virtual machine for the logging solution.

- Ensure sufficient compute, storage, and network capacity to handle log ingestion and retention.

- For high availability, deploy multiple nodes or clusters.

- For cloud-based solutions:

- Create the necessary cloud resources (storage buckets, compute instances, etc.).

4. Configure Log Collection Agents

- Install and configure log collection agents on all servers, devices, and services you want to monitor.

- Popular log collection tools:

- Linux: rsyslog or syslog-ng

- Windows: Winlogbeat or NXLog

- Applications: Filebeat for log files, Metricbeat for performance metrics

- Kubernetes:

- Use Fluentd, Fluent Bit, or Logstash as sidecar containers or DaemonSets to collect pod logs.

- Integrate with Kubernetes’ native logging (e.g., kube-apiserver audit logs).

- Configure these agents to forward logs to the centralized logging server.
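Applications can also forward their own logs without a separate agent. As a small sketch, Python's standard `logging.handlers.SysLogHandler` can send records to a remote syslog endpoint over UDP. The function name, logger name, host, and port here are placeholders chosen for illustration.

```python
import logging
import logging.handlers


def build_forwarding_logger(name: str, host: str, port: int = 514) -> logging.Logger:
    """Return a logger that ships records to a remote syslog endpoint over UDP."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # SysLogHandler defaults to UDP when no socktype is given.
    handler = logging.handlers.SysLogHandler(address=(host, port))
    # Prefix each message with the logger name so the server can tell apps apart.
    handler.setFormatter(logging.Formatter("%(name)s: %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger
```

A service would call something like `build_forwarding_logger("billing-api", "logs.example.internal")` once at startup and then log as usual. UDP delivery is best-effort, so a TCP or TLS transport may be preferable where lost messages matter.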

5. Set Up Log Ingestion and Parsing

- Configure your centralized logging solution to:

- Ingest logs from multiple sources.

- Parse logs into structured formats (e.g., JSON) for easier search and visualization.

- Use tools like Logstash, Fluentd, or custom scripts to transform and normalize logs.
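To illustrate what parsing into a structured format means, here is a minimal sketch that turns a classic syslog-style line into a dictionary and then JSON. The regular expression and field names are assumptions for this example; in practice Logstash or Fluentd would handle this with their own parsers.

```python
import json
import re
from typing import Optional

# Matches lines such as:
#   Jan 12 06:25:43 web01 sshd[1234]: Failed password for invalid user admin
SYSLOG_PATTERN = re.compile(
    r"^(?P<timestamp>\w{3}\s+\d{1,2}\s[\d:]{8})\s"
    r"(?P<host>\S+)\s"
    r"(?P<program>[^\[:\s]+)(?:\[(?P<pid>\d+)\])?:\s"
    r"(?P<message>.*)$"
)


def parse_syslog_line(line: str) -> Optional[dict]:
    """Return the line's fields as a dict, or None if it does not match."""
    match = SYSLOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    if record["pid"] is not None:
        record["pid"] = int(record["pid"])  # store the PID as a number
    return record


def to_json(line: str) -> Optional[str]:
    """Serialize a parsed line as JSON, or return None for unparseable input."""
    record = parse_syslog_line(line)
    return json.dumps(record) if record is not None else None
```

Returning `None` for lines that do not match lets the pipeline route them to a separate "unparsed" stream instead of dropping them silently.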

6. Configure Indexing and Storage

- For Elasticsearch or similar tools:

- Define indices for different types of logs (e.g., `system-logs-*`, `app-logs-*`).

- Set up index lifecycle management (ILM) to automatically delete or archive old logs.

- For cloud storage:

- Configure lifecycle rules (e.g., move to cold storage after 30 days).

- Ensure storage is scalable to handle growth.
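The naming and lifecycle ideas above can be sketched in a few lines. This is not the Elasticsearch ILM API; it only shows the decision a lifecycle policy encodes. The daily index naming scheme and the 30-day and 90-day thresholds are assumptions for illustration.

```python
from datetime import date


def index_name(log_type: str, day: date) -> str:
    """Build a daily index name such as 'system-logs-2024.01.15'."""
    return f"{log_type}-logs-{day:%Y.%m.%d}"


def lifecycle_action(index_day: date, today: date,
                     archive_after_days: int = 30,
                     delete_after_days: int = 90) -> str:
    """Decide what to do with an index based on its age in days."""
    age = (today - index_day).days
    if age >= delete_after_days:
        return "delete"
    if age >= archive_after_days:
        return "archive"  # e.g., move to cold storage
    return "keep"
```

Date-based names make lifecycle rules simple, because a whole index can be archived or deleted at once rather than removing individual documents.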

7. Set Up Dashboards and Alerts

- Use visualization tools (e.g., Kibana, Grafana, or Splunk) to create dashboards for:

- System health monitoring.

- Security incidents (e.g., failed login attempts, suspicious activity).

- Application performance and errors.

- Configure alerts for critical events:

- Use tools like Prometheus Alertmanager, PagerDuty, or native alerting in Splunk/Kibana.

- Send notifications via email, Slack, or SMS.
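As an example of the logic behind an alert on failed login attempts, the sketch below counts events in a sliding time window. The class name, the threshold of five, and the one-minute window are illustrative assumptions. Kibana, Splunk, and similar tools provide equivalent rules natively.

```python
from collections import deque
from datetime import datetime, timedelta


class FailedLoginAlert:
    """Fires when too many failed logins occur within a time window.

    Assumes events are recorded in chronological order.
    """

    def __init__(self, threshold: int = 5,
                 window: timedelta = timedelta(minutes=1)) -> None:
        self.threshold = threshold
        self.window = window
        self._events: deque = deque()

    def record(self, when: datetime) -> bool:
        """Record one failed login; return True if the alert should fire."""
        self._events.append(when)
        cutoff = when - self.window
        # Drop events that have fallen out of the window.
        while self._events and self._events[0] < cutoff:
            self._events.popleft()
        return len(self._events) >= self.threshold
```

The return value would typically drive a notification, such as an email or a Slack message. Raising the threshold or shortening the window reduces noise at the cost of slower detection.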

8. Secure the Logging System

- Restrict access to the logging server (e.g., use firewalls, VPNs, or private network access).

- Implement role-based access control (RBAC) for log viewing and management.

- Encrypt logs in transit using TLS and at rest using storage-level encryption.

- Regularly patch and update the logging system to mitigate vulnerabilities.
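For encryption in transit, a client that ships logs over TLS needs a properly configured context. Here is a minimal sketch using Python's standard `ssl` module. The function name and the optional private CA file are assumptions, and the TLS 1.2 minimum is one reasonable choice rather than a requirement from this guide.

```python
import ssl
from typing import Optional


def build_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Create a client-side TLS context that verifies the log server.

    ca_file: optional path to a private CA bundle; when omitted,
    the system's default trusted CAs are used.
    """
    # create_default_context enables certificate and hostname verification.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The returned context can wrap a TCP socket via `ctx.wrap_socket(sock, server_hostname=...)` before any log data is sent.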

9. Test and Validate

- Simulate log-generating events (e.g., login attempts, application errors) and verify they are collected and displayed correctly.

- Test alerting mechanisms to ensure timely notifications.
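One way to validate the pipeline end to end is to emit a uniquely tagged test event and poll until it shows up in the central store. The sketch below shows this idea. The `send` and `search` callables are hypothetical hooks that you would implement for your own agent and search backend.

```python
import time
import uuid
from typing import Callable


def verify_delivery(send: Callable[[str], None],
                    search: Callable[[str], bool],
                    timeout_s: float = 30.0,
                    poll_s: float = 1.0) -> bool:
    """Send a uniquely marked event and wait for it to become searchable.

    send:   hypothetical hook that emits a log message through the pipeline.
    search: hypothetical hook that returns True once the marker is indexed.
    """
    marker = f"logging-selftest-{uuid.uuid4()}"
    send(marker)
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if search(marker):
            return True
        time.sleep(poll_s)
    return False  # the marker never appeared within the timeout
```

Running a check like this on a schedule also catches silent breakage later, for example an agent that stops forwarding after an upgrade.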

10. Monitor and Optimize

- Regularly review logging performance and storage usage.

- Optimize log ingestion pipelines to reduce latency.

- Tune alert thresholds to minimize false positives and false negatives.

Example Architecture

If you’re using ELK/Elastic Stack:

1. Elasticsearch: Stores and indexes logs.

2. Logstash: Collects, transforms, and forwards logs.

3. Kibana: Visualizes logs and creates dashboards.

4. Beats: Lightweight agents (e.g., Filebeat, Metricbeat) to collect and ship logs.

For Kubernetes:

- Deploy Fluentd or Fluent Bit as a DaemonSet to collect logs from all nodes.

- Forward logs to Elasticsearch, Loki, or a cloud logging service.

By implementing a centralized logging system, you can efficiently monitor your IT infrastructure, detect anomalies, and improve overall system reliability and security.