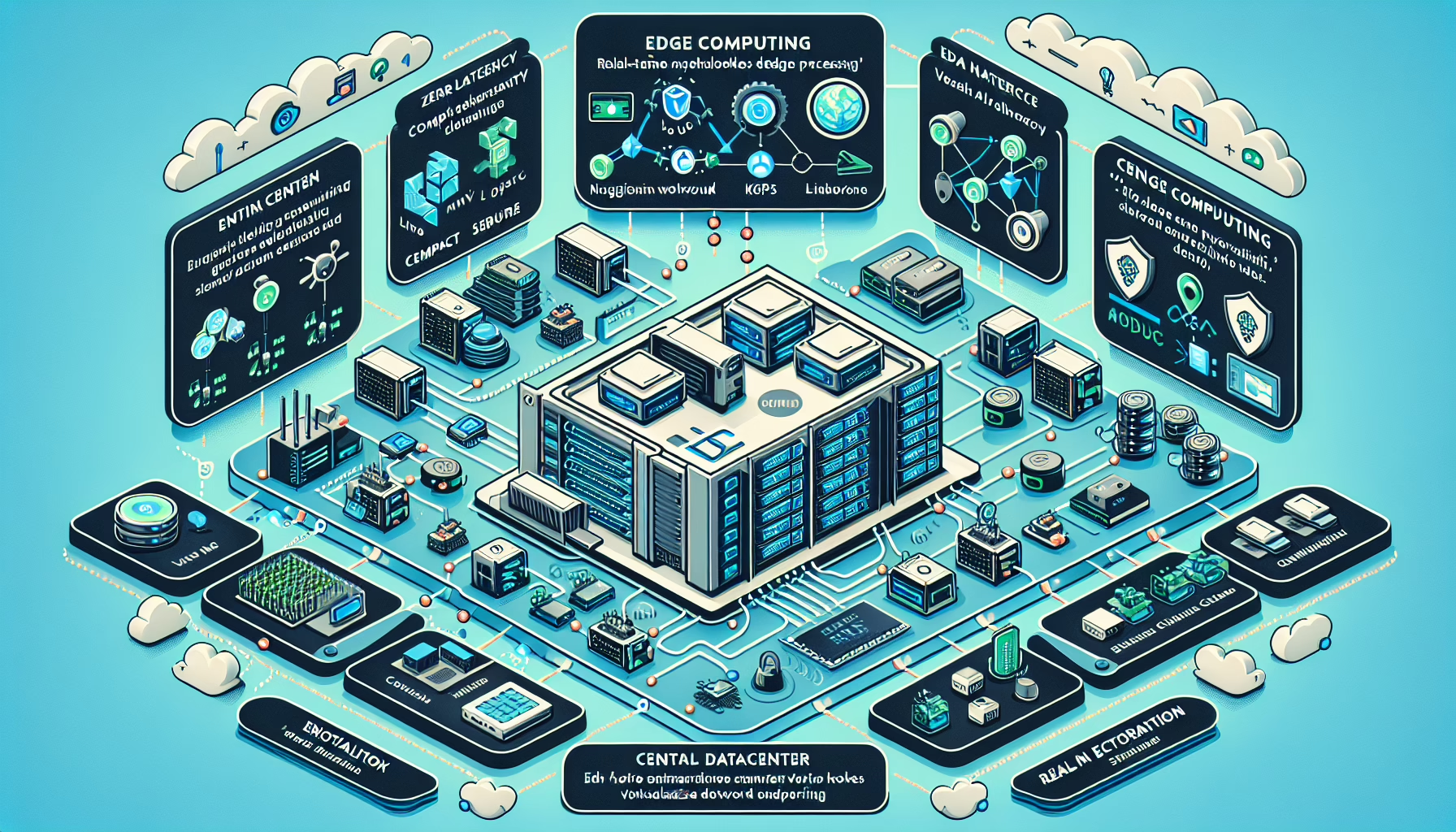

Implementing edge computing within a datacenter requires careful planning, sound infrastructure design, and integration of technologies that support edge processing. If you are an IT manager responsible for datacenter and IT infrastructure, the following step-by-step guide covers the main stages:

1. Define Use Cases and Requirements

- Identify workloads: Determine which workloads need low latency, high bandwidth, or real-time processing, such as AI inference, IoT, or video analytics.

- Clarify goals: Understand the business objectives, such as reducing latency, improving performance, or offloading central datacenter resources.

- Determine scalability: Assess how the edge solution will scale over time and accommodate future needs.
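
To make the workload-identification step concrete, here is a minimal sketch of a placement rule. It assumes two measurable inputs per workload (tolerable latency and sustained data rate) and compares them with the WAN round-trip time and capacity to the central datacenter. The class, function, and all numbers are hypothetical and only illustrate the reasoning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    max_latency_ms: float   # end-to-end latency the application can tolerate
    data_rate_mbps: float   # sustained data volume produced at the source


def place_workload(w: Workload, wan_rtt_ms: float, wan_capacity_mbps: float) -> str:
    """Return 'edge' when the central datacenter cannot meet the workload's needs."""
    if w.max_latency_ms < wan_rtt_ms:
        return "edge"       # the round trip alone already exceeds the latency budget
    if w.data_rate_mbps > wan_capacity_mbps:
        return "edge"       # the link cannot carry the raw data upstream
    return "central"


# Hypothetical workloads and link figures, for illustration only.
workloads = [
    Workload("video-analytics", max_latency_ms=50, data_rate_mbps=400),
    Workload("nightly-reporting", max_latency_ms=60_000, data_rate_mbps=5),
]
placement = {
    w.name: place_workload(w, wan_rtt_ms=80, wan_capacity_mbps=200)
    for w in workloads
}
print(placement)
```

A real assessment would likely add cost, data-residency, and availability criteria, but even a simple rule like this helps separate workloads that need the edge from those that merely could run there.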

2. Plan the Edge Architecture

- Decentralized infrastructure: Design an architecture that distributes computing resources closer to where data is generated (e.g., near IoT devices or end users).

- Edge nodes: Deploy edge nodes (mini datacenters or microservers) that operate independently but can communicate with the main datacenter.

- Networking: Ensure robust connectivity between edge nodes and the central datacenter using SD-WAN or low-latency network links.
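
One way to picture how independent edge nodes relate to the main datacenter is a routing rule: send each request to the closest healthy edge node, and fall back to the central site when none is available. The sketch below assumes that round-trip times and health flags are already collected elsewhere; the node names and the `central-dc` label are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class EdgeNode:
    name: str
    rtt_ms: float          # measured round-trip time from the data source
    healthy: bool = True


def pick_target(nodes: Iterable[EdgeNode], central_name: str = "central-dc") -> str:
    """Prefer the lowest-latency healthy edge node; otherwise use the central site."""
    healthy = [n for n in nodes if n.healthy]
    if not healthy:
        return central_name
    return min(healthy, key=lambda n: n.rtt_ms).name


nodes = [
    EdgeNode("edge-warehouse", rtt_ms=4.0),
    EdgeNode("edge-store-042", rtt_ms=2.5, healthy=False),
    EdgeNode("edge-regional", rtt_ms=12.0),
]
print(pick_target(nodes))
```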

3. Select Hardware for Edge Computing

- Servers: Use compact, energy-efficient servers designed for edge environments. These should support high-performance computing for local processing.

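- GPU acceleration: Equip edge nodes with GPUs for AI/ML tasks, video processing, and other computationally intensive workloads. Low-power inference cards (e.g., NVIDIA T4, L4, or RTX series) suit space- and power-constrained sites; datacenter-class cards such as the NVIDIA A100 fit only where power and cooling allow.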

- Storage: Implement high-speed, durable storage (e.g., NVMe SSDs) for real-time data processing and local caching.

- Ruggedized hardware: For edge environments in harsh conditions, consider ruggedized devices designed for temperature, vibration, and dust resistance.

4. Deploy Virtualization and Containerization

- Virtual machines (VMs): Use hypervisors like VMware vSphere, Microsoft Hyper-V, or KVM to manage edge workloads.

- Container orchestration: Deploy Kubernetes or lightweight alternatives like K3s or MicroK8s to manage containerized applications at the edge.

- Automation: Use Infrastructure as Code (IaC) tools like Terraform or Ansible to automate edge node provisioning.
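
As an illustration of automating containerized deployments, the sketch below generates a Kubernetes `Deployment` manifest per edge site from Python. Kubernetes accepts manifests in JSON as well as YAML, so only the standard library is used. The image URL, the `edge-site` node label, and the resource limits are assumptions made for the example, not values from any particular environment.

```python
import json


def edge_deployment(name: str, image: str, site: str, replicas: int = 1) -> dict:
    """Build an apps/v1 Deployment pinned to nodes labeled for one edge site."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Hypothetical node label; nodes must be labeled accordingly.
                    "nodeSelector": {"edge-site": site},
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Modest limits, since edge nodes are resource constrained.
                            "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
                        }
                    ],
                },
            },
        },
    }


manifest = edge_deployment(
    "video-analytics",
    "registry.example.com/video-analytics:1.0",  # placeholder image
    site="store-042",
)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be piped into `kubectl apply -f -` on a K3s or MicroK8s cluster. In practice a tool such as Terraform or Ansible would typically own this templating; the point here is only that per-site manifests can be generated rather than hand-edited.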

5. Implement AI and Machine Learning at the Edge

- Deploy AI frameworks that can run locally, such as TensorFlow Lite, PyTorch Mobile, or ONNX Runtime.

- Optimize models for edge devices using techniques like model quantization or pruning to reduce resource requirements.

- Leverage GPU-powered edge devices for inferencing tasks.
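
To show what model quantization does at its core, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is not how TensorFlow Lite or ONNX Runtime implement it internally; those toolkits add calibration, per-channel scales, and operator fusion. The weights are made-up values.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [v * scale for v in quantized]


weights = [0.5, -1.27, 0.02, 1.0]          # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per value. Whether that accuracy loss is acceptable has to be validated on the actual model.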

6. Ensure Reliable Networking

- Low-latency communication: Use SD-WAN or MPLS links to provide predictable, low-latency connectivity between the edge nodes and the datacenter.

- 5G and IoT: Utilize 5G networks for edge devices that require ultra-low latency and high bandwidth.

- Edge caching: Implement CDN-like caching at the edge to reduce network traffic and improve response times.
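
The edge-caching idea can be sketched as a small in-memory cache with a time-to-live and least-recently-used eviction. This is a simplified illustration, assuming a single process and a caller-supplied `fetch_from_origin` function (hypothetical); a production deployment would more likely use an existing caching proxy.

```python
import time
from collections import OrderedDict


class EdgeCache:
    """TTL cache with LRU eviction, serving repeat requests locally."""

    def __init__(self, max_items=1024, ttl_seconds=60.0, clock=time.monotonic):
        self._items = OrderedDict()   # key -> (expires_at, value)
        self._max_items = max_items
        self._ttl = ttl_seconds
        self._clock = clock           # injectable so expiry can be tested

    def get(self, key, fetch_from_origin):
        now = self._clock()
        entry = self._items.get(key)
        if entry is not None and entry[0] > now:
            self._items.move_to_end(key)      # mark as recently used
            return entry[1]
        # Miss or expired: go back to the central datacenter once.
        value = fetch_from_origin(key)
        self._items[key] = (now + self._ttl, value)
        self._items.move_to_end(key)
        while len(self._items) > self._max_items:
            self._items.popitem(last=False)   # evict the least recently used entry
        return value


origin_calls = []


def fetch(key):
    """Stand-in for a request to the central datacenter."""
    origin_calls.append(key)
    return f"payload-for-{key}"


cache = EdgeCache(max_items=2, ttl_seconds=30)
cache.get("/firmware/v2.bin", fetch)
cache.get("/firmware/v2.bin", fetch)   # served from the edge, no second origin call
print(len(origin_calls))
```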

7. Focus on Security

- Data encryption: Encrypt data in transit and at rest on edge devices to protect sensitive information.

- Zero Trust architecture: Adopt a Zero Trust model for access control between the edge and the datacenter.

- Endpoint security: Implement strong endpoint protection on edge devices, such as firewalls, intrusion detection, and anti-malware solutions.
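
A core Zero Trust principle is that every request is verified, regardless of where on the network it originates. The sketch below illustrates that idea with an HMAC signature and a freshness window, using only the standard library. It assumes a per-device shared secret that has already been provisioned securely; real deployments commonly rely on mutual TLS or short-lived certificates instead. It covers authentication only, not encryption of the payload.

```python
import hashlib
import hmac
import time


def sign_request(secret: bytes, device_id: str, issued_at: int) -> str:
    """Produce a signature binding the device identity to a timestamp."""
    message = f"{device_id}:{issued_at}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(secret, device_id, issued_at, signature, now=None, max_age_s=300):
    """Accept the request only if it is fresh and the signature matches."""
    now = int(time.time()) if now is None else now
    if not 0 <= now - issued_at <= max_age_s:
        return False                      # stale or future-dated: reject
    expected = sign_request(secret, device_id, issued_at)
    return hmac.compare_digest(expected, signature)   # constant-time comparison


secret = b"example-secret-do-not-use"      # placeholder, not a real key
issued = int(time.time())
token = sign_request(secret, "edge-node-17", issued)
print(verify_request(secret, "edge-node-17", issued, token))
```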

8. Monitoring and Management

- Centralized monitoring: Use tools like Prometheus, Grafana, or Datadog to monitor edge nodes and the central datacenter.

- Edge analytics: Implement real-time analytics platforms to visualize and act on data generated at the edge.

- Remote management: Use out-of-band management interfaces such as Redfish or IPMI to control edge devices without physical access.
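
Centralized monitoring of many edge nodes often starts with a simple question: which nodes have stopped reporting? The sketch below flags nodes whose last heartbeat is older than a timeout. The node names, timestamps, and 90-second timeout are hypothetical; in practice a tool such as Prometheus would collect the data and evaluate an equivalent alert rule.

```python
def stale_nodes(last_seen: dict, now: float, timeout_s: float = 90.0) -> list:
    """Return the names of nodes whose last heartbeat is older than the timeout."""
    return sorted(name for name, ts in last_seen.items() if now - ts > timeout_s)


# Seconds since an arbitrary epoch; illustrative values only.
last_seen = {
    "edge-store-001": 1_000.0,
    "edge-store-002": 940.0,
    "edge-store-003": 700.0,
}
print(stale_nodes(last_seen, now=1_010.0))
```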

9. Backup and Disaster Recovery

- Local backups: Store backups locally at the edge for faster recovery in case of failure.

- Replication: Replicate critical data back to the main datacenter or cloud for additional redundancy.

- Disaster recovery planning: Develop a DR strategy tailored to edge nodes, including failover scenarios and rapid restoration processes.
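
Replication is only useful if the replica is intact. As a minimal sketch, the functions below compare SHA-256 checksums of a local backup and its replicated copy. The file names are hypothetical, and both files are assumed to be reachable as local paths (for example, through a mounted share); a real pipeline would usually exchange checksums rather than read both copies from one host.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def replica_matches(local_backup: Path, replica: Path) -> bool:
    """True when the replicated copy is byte-for-byte identical to the local one."""
    return sha256_of(local_backup) == sha256_of(replica)


# Demonstration with temporary files standing in for real backup archives.
with tempfile.TemporaryDirectory() as tmp:
    local = Path(tmp) / "edge-backup.tar"
    remote = Path(tmp) / "dc-replica.tar"
    local.write_bytes(b"sensor data" * 1000)
    remote.write_bytes(b"sensor data" * 1000)
    print(replica_matches(local, remote))
```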

10. Test and Optimize

- Pilot projects: Start with small-scale deployments and refine the edge computing model based on performance metrics.

- Stress tests: Evaluate edge nodes under high workloads to identify bottlenecks and optimize resource allocation.

- Continuous improvement: Regularly update edge infrastructure with newer hardware, software patches, and performance enhancements.
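
When evaluating a pilot or stress test, tail latency is usually more informative than the average. The sketch below summarizes latency samples as percentiles and checks the 95th percentile against a target. The samples and the 40 ms target are invented for illustration; real figures would come from the monitoring stack.

```python
import statistics


def latency_report(samples_ms, slo_ms):
    """Summarize latency samples and check p95 against a service-level target."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    p50, p95, p99 = cuts[49], cuts[94], cuts[98]
    return {"p50": p50, "p95": p95, "p99": p99, "meets_slo": p95 <= slo_ms}


# Hypothetical measurements from a stress test: mostly fast, with a slow tail.
samples = [12.0] * 90 + [35.0] * 8 + [180.0] * 2
report = latency_report(samples, slo_ms=40.0)
print(report)
```

Here the median looks healthy while the 99th percentile exposes the slow tail, which is the kind of bottleneck a stress test is meant to surface.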

Tools and Technologies to Consider:

- Hardware: Dell EMC PowerEdge XE servers, NVIDIA Jetson, HPE Edgeline servers.

- Software: VMware Tanzu, OpenShift, K3s, Kubernetes.

- Networking: Cisco SD-WAN, Juniper Contrail, HPE Aruba EdgeConnect (formerly Silver Peak).

- AI frameworks: TensorFlow Lite, ONNX Runtime, NVIDIA Triton Inference Server.

Challenges:

- Resource constraints: Edge devices may have limited resources compared to a central datacenter.

- Scalability: Managing hundreds or thousands of edge devices requires scalable orchestration tools.

- Security: Edge locations can be harder to secure than a centralized datacenter.

By following this approach, you can successfully integrate edge computing into your datacenter operations, enabling faster decision-making, reduced latency, and more efficient processing of data closer to its source.