Ever since I started using NetBackup, I had been dealing with a problem of backups being interrupted midway. The cases we opened and the work we did brought no improvement, until an engineer who came from abroad finally resolved it, even though he could not pin down the exact root cause. I went through the pain so you don't have to. Here is the solution:

Backline Root Cause Analysis

| Field | Details |
|---|---|
| RCA Author | John Peters |
| Error Code/Message | Error 13 |
| Diagnostic Steps | Verbose logging, TCP dumps, media/master/client I/O parameter adjustments |
| Related E-Tracks | 3445293 |
| Problem Description | MS SQL backups fail randomly when MSDP is used. |
| Cause | It is believed that several adjustments made on the master and media servers resolved the issues. Engineering believes that the combined network and I/O tuning relieved the congestion, and with it the bottleneck, allowing NBU to complete backups successfully. |
| Solution | I/O parameter adjustments on the master server, media server and client. |
| Resolution Criteria | - |

Description / Resolution (if criteria is not met):

In our attempts to determine the root cause of the status 13 failures when backing up SQL databases to MSDP using media-side deduplication,

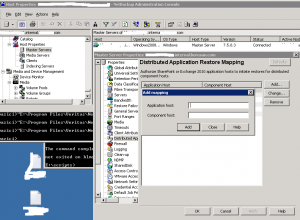

From the initial logs we could see that, after some time, the dbclient process attempted to send data to the appliance and the send failed about 19 seconds later, i.e.:

- `16:07:53.335 [17300.26824] <4> VxBSASendData: INF - entering SendData.`
- `16:07:53.335 [17300.26824] <4> dbc_put: INF - sending 65536 bytes`
- `16:07:53.335 [17300.26824] <2> writeToServer: bytesToSend = 65536 bytes`
- `16:07:53.335 [17300.26824] <2> writeToServer: start send -- try=1`
- `16:08:12.224 [17300.26824] <16> writeToServer: ERR - send() to server on socket failed:`

This message occurs as a consequence of the OS library call send() returning an error while attempting to send data to a file descriptor (socket) associated with a network connection. When this failure occurs, dbclient sends a failure status to the media server, and bpbrm terminates the job, resulting in status 13. At the time of the failure, the OS library call recv() on the media server receives neither data nor any indication from the other end of the network connection, which indicates that the TCP stacks on the two hosts disagree about the state of the connection. What we needed to find out was whether the problem was occurring in the TCP stack on the client side or the appliance side of that connection.

To understand this, we felt it necessary to look at the problem at the TCP level, hoping this would show whether data was flowing or whether there was a plugin ingest issue. This required a network packet capture between the hosts, as well as strace on the bptm processes, with full debug logging enabled. This approach poses a difficult problem given the intermittent nature of the failures and the unpredictable time it takes for a job to fail: the resulting logs would be large, and the tcpdump captures and straces enormous. We attempted to work around this by rotating the TCP capture files and stopping the traces as soon as the job failed, in an attempt to capture the critical information.
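
When comparing many failed jobs, it can help to extract the interval between the `start send` line and the `ERR` line automatically instead of reading timestamps by hand. Below is a minimal sketch, assuming the dbclient debug log has been copied to a Unix-like host and keeps the `HH:MM:SS.mmm` line prefix shown in the excerpt; the function name `send_stall_ms` is hypothetical, not a NetBackup tool.

```shell
#!/bin/sh
# Sketch: measure how long dbclient's writeToServer() took between
# "start send" and the "ERR ... send() ... failed" line in a dbclient log.
# Assumptions: each line starts with an HH:MM:SS.mmm timestamp, and both lines
# fall on the same day (midnight wrap is not handled). If a send is retried,
# the interval is measured from the most recent "start send" line.

# send_stall_ms LOGFILE -> prints one duration in milliseconds per failed send
send_stall_ms() {
    awk '
        # Convert HH:MM:SS.mmm to milliseconds since midnight.
        function to_ms(ts,   p) {
            split(ts, p, "[:.]")
            return ((p[1] * 60 + p[2]) * 60 + p[3]) * 1000 + p[4]
        }
        /writeToServer: start send/          { start = to_ms($1) }
        /writeToServer: ERR/ && start != ""  { print to_ms($1) - start; start = "" }
    ' "$1"
}
```

For example, running `send_stall_ms dbclient.log` (file name hypothetical) against a log containing the excerpt above prints `18889`, i.e. roughly 18.9 seconds between the start of the send and the reported failure.
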

However, when we set this test up, the additional load imposed by the tracing and logging impacted the appliance to such a degree that the backup eventually failed with a status 13. Unfortunately, we do not believe this failure was the same issue seen in the earlier failures: when the job was repeated with tracing turned off, it completed successfully.

To try to prevent the issue from occurring, we made tuning changes to the components we felt were potentially the source of the problem, specifically:

1) Increased the TCP retransmission setting on the client to 15 (http://support.microsoft.com/default.aspx?scid=kb;en-us;170359).
2) Implemented the following settings on the appliance:
   - `echo 128 > /usr/openv/netbackup/db/config/CD_NUMBER_DATA_BUFFERS`
   - `echo 524288 > /usr/openv/netbackup/db/config/CD_SIZE_DATA_BUFFERS`
   - `echo 180 > /usr/openv/netbackup/db/config/CD_UPDATE_INTERVAL`
   - `touch /usr/openv/netbackup/db/config/CD_WHOLE_IMAGE_COPY`
   - `echo 0 > /usr/openv/netbackup/NET_BUFFER_SZ`
   - `echo 0 > /usr/openv/netbackup/NET_BUFFER_REST`
   - `echo 256 > /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS`
   - `echo 512 > /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS_DISK`
   - `echo 16 > /usr/openv/netbackup/db/config/NUMBER_DATA_BUFFERS_FT`
   - `echo 1500 > /usr/openv/netbackup/db/config/OS_CD_BUSY_RETRY_LIMIT`
   - `echo 262144 > /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS`
   - `echo 1048576 > /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS_DISK`
   - `echo 262144 > /usr/openv/netbackup/db/config/SIZE_DATA_BUFFERS_FT`
   - `echo 3600 > /usr/openv/netbackup/db/config/DPS_PROXYDEFAULTRECVTMO`
   - `echo 3600 > /usr/openv/netbackup/db/config/DPS_PROXYDEFAULTSENDTMO`
   - `touch /usr/openv/netbackup/db/config/DPS_PROXYNOEXPIRE`
   - `echo 5000 > /usr/openv/netbackup/db/config/MAX_FILES_PER_ADD`
3) Set the following in /usr/openv/pdde/pdcr/etc/contentrouter.cfg:
   - `PrefetchThreadNum=16`
   - `MaxNumCaches=1024`
   - `ReadBufferSize=65536`
   - `WorkerThreads=128`
4) Set the following in /usr/openv/lib/ost-plugins/pd.conf:
   - `PREFETCH_SIZE = 67108864`
   - `CR_STATS_TIMER = 300`
5) Modified the "Client Communication Buffer size": Host Properties -> Client Settings -> Communication Buffer size set to 256 KB.
6) Modified the MS SQL script: BUFFERCOUNT set to 2 and STRIPE set to 4.
7) Additionally, we would always advise checking the "Resolution" section of this technote: http://www.symantec.com/docs/TECH37372.
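
The buffer settings in item 2 trade memory for throughput. A common rule of thumb from NetBackup tuning guidance is that each data stream allocates roughly NUMBER_DATA_BUFFERS × SIZE_DATA_BUFFERS of shared memory, using the _DISK, _FT or CD_ variants where they apply. The sketch below applies that rule to the values set above; treat the results as per-stream estimates under that assumption, and multiply by the number of concurrent streams to get a total. The helper name `buffer_pool_mib` is hypothetical.

```shell
#!/bin/sh
# Sketch: estimate the shared memory of one buffer pool as COUNT x SIZE.
# Assumption: one pool per data stream; concurrency is not accounted for here.

# buffer_pool_mib COUNT SIZE_BYTES -> pool size in MiB (integer division)
buffer_pool_mib() {
    echo $(( $1 * $2 / 1048576 ))
}

# Values taken from the settings applied on the appliance in item 2.
echo "default: $(buffer_pool_mib 256 262144) MiB"   # 256 x 256 KiB = 64 MiB
echo "disk:    $(buffer_pool_mib 512 1048576) MiB"  # 512 x 1 MiB   = 512 MiB
echo "FT:      $(buffer_pool_mib 16 262144) MiB"    # 16 x 256 KiB  = 4 MiB
echo "CD:      $(buffer_pool_mib 128 524288) MiB"   # 128 x 512 KiB = 64 MiB
```

The disk pool dominates: at 512 MiB per stream, a handful of concurrent MSDP backups already accounts for several gigabytes, which is worth checking against the appliance's available memory.
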

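
The `echo` commands in item 2 overwrite any existing values without keeping a record of what was there before. If you apply similar changes, a small wrapper that preserves the previous value makes rollback easier. Below is a minimal sketch; `set_tunable`, `set_flag` and the `NBU_ROOT` override are hypothetical helpers, not NetBackup tools.

```shell
#!/bin/sh
# Sketch: apply NetBackup "touch file" tunables while keeping a one-time
# backup of any previous value. NBU_ROOT can be overridden (e.g. for testing);
# it defaults to the path used in the commands above.
NBU_ROOT=${NBU_ROOT:-/usr/openv/netbackup}

# set_tunable RELATIVE_PATH VALUE
# Writes VALUE to $NBU_ROOT/RELATIVE_PATH. If the file already exists and no
# .orig copy has been made yet, the current file is saved as FILE.orig first,
# so repeated runs never overwrite the original backup.
set_tunable() {
    target="$NBU_ROOT/$1"
    if [ -f "$target" ] && [ ! -f "$target.orig" ]; then
        cp -p "$target" "$target.orig"
    fi
    printf '%s\n' "$2" > "$target"   # same content as: echo VALUE > FILE
}

# set_flag RELATIVE_PATH
# Presence-only tunables such as CD_WHOLE_IMAGE_COPY only need to exist.
set_flag() {
    touch "$NBU_ROOT/$1"
}
```

For example: `set_tunable db/config/NUMBER_DATA_BUFFERS 256`, `set_tunable NET_BUFFER_SZ 0` and `set_flag db/config/DPS_PROXYNOEXPIRE`. Files that did not exist beforehand get no `.orig` copy, so rolling those back means deleting them.
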
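
Items 3 and 4 change `key=value` entries in contentrouter.cfg and `KEY = value` entries in pd.conf, where defaults are often present but commented out. Below is a minimal sketch of a helper that edits such a key in place. It is a hypothetical function (`set_conf_key`), not a NetBackup tool, and it deliberately refuses to add keys that are not already present, on the assumption that appending to the end of a sectioned file like contentrouter.cfg could place the key in the wrong section.

```shell
#!/bin/sh
# Sketch: set KEY to VALUE in a key/value config file, keeping its position.
# Rewrites every active "KEY=..." line; if there is none, it uncomments and
# rewrites the first "#KEY=..." line instead. Returns 1 (file untouched) if
# KEY does not appear at all. SEPARATOR is written verbatim between key and
# value, e.g. '=' for contentrouter.cfg or ' = ' for pd.conf.

# set_conf_key FILE KEY VALUE SEPARATOR
set_conf_key() {
    file=$1 key=$2 value=$3 sep=$4
    scratch="$file.tmp.$$"
    if awk -v k="$key" -v v="$value" -v s="$sep" '
        # True if line defines key k (leading blanks allowed, then "=").
        function defines(line,   rest) {
            sub(/^[ \t]*/, "", line)
            if (index(line, k) != 1) return 0
            rest = substr(line, length(k) + 1)
            return rest ~ /^[ \t]*=/
        }
        function uncomment(line) { sub(/^[ \t]*#+/, "", line); return line }
        NR == FNR { if (defines($0)) active = 1; next }    # pass 1: scan
        defines($0) || (!active && !done && defines(uncomment($0))) {
            print k s v; done = 1; next                     # pass 2: rewrite
        }
        { print }
        END { exit(done ? 0 : 1) }
    ' "$file" "$file" > "$scratch"; then
        cat "$scratch" > "$file"    # overwrite in place, keeping permissions
        rm -f "$scratch"
    else
        rm -f "$scratch"
        return 1
    fi
}
```

For example, `set_conf_key /usr/openv/pdde/pdcr/etc/contentrouter.cfg WorkerThreads 128 '='` or `set_conf_key /usr/openv/lib/ost-plugins/pd.conf PREFETCH_SIZE 67108864 ' = '`. Taking a copy of each file before editing is still advisable.
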