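Configuring NVIDIA GPU drivers for deep learning workloads on Linux involves several steps: installing the driver, the CUDA toolkit, and cuDNN, and then a framework with GPU support. The commands below assume Ubuntu (most also apply to other Debian-based distributions). Here’s a detailed guide: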

1. Check GPU Compatibility

- Confirm that your NVIDIA GPU is supported by the CUDA and cuDNN versions you plan to use. NVIDIA's CUDA GPUs page lists the compute capability of each model, and the CUDA and cuDNN release notes list the GPU architectures each release supports.
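Before the driver is installed, nvidia-smi is not available, but lspci can still identify the GPU model to look up. A minimal Python sketch, assuming lspci's usual `<slot> <class>: <description>` line format and that NVIDIA GPUs appear under the "VGA compatible controller" or "3D controller" classes:

```python
from __future__ import annotations

import shutil
import subprocess

# lspci device classes under which NVIDIA GPUs usually appear (assumption)
GPU_CLASSES = ("VGA compatible controller", "3D controller")


def find_nvidia_gpus(lspci_output: str) -> list[str]:
    """Return descriptions of NVIDIA GPUs listed in `lspci` output."""
    gpus = []
    for line in lspci_output.splitlines():
        # Expected shape: "01:00.0 VGA compatible controller: NVIDIA Corporation ..."
        _slot, _, rest = line.partition(" ")
        device_class, sep, description = rest.partition(": ")
        if sep and device_class in GPU_CLASSES and "NVIDIA" in description:
            gpus.append(description.strip())
    return gpus


def list_local_gpus() -> list[str]:
    """Run lspci if it is installed; return an empty list otherwise."""
    if shutil.which("lspci") is None:
        return []
    result = subprocess.run(["lspci"], capture_output=True, text=True, check=False)
    return find_nvidia_gpus(result.stdout)


if __name__ == "__main__":
    for gpu in list_local_gpus():
        print(gpu)
```

Audio functions on the same card (for example "Audio device: NVIDIA Corporation ...") are skipped because their class is not a display class.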

2. Prepare Your Linux Environment

- Update your installed packages:

sudo apt update && sudo apt upgrade

- Install the build tools and the headers for your running kernel, which DKMS needs to build the NVIDIA kernel module:

sudo apt install build-essential dkms linux-headers-$(uname -r)
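To confirm these prerequisites before installing the driver, a small Python check can look for the build tools on PATH and for the kernel build directory that DKMS compiles against. This is a sketch: the tool list is an assumption (gcc and make come from build-essential), and `/lib/modules/<release>/build` is where Debian-family kernel header packages usually link the headers.

```python
from __future__ import annotations

import os
import platform
import shutil

# Tools to look for; gcc and make come from build-essential (assumed list)
REQUIRED_TOOLS = ("gcc", "make", "dkms")


def missing_build_tools(tools: tuple[str, ...] = REQUIRED_TOOLS) -> list[str]:
    """Return the tools that are not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def kernel_headers_present(release: str | None = None) -> bool:
    """Check for the build directory DKMS compiles against for a kernel release.

    Defaults to the running kernel (the same value as `uname -r`).
    """
    release = release or platform.release()
    return os.path.isdir(f"/lib/modules/{release}/build")


if __name__ == "__main__":
    print("Missing tools:", missing_build_tools() or "none")
    print("Kernel headers present:", kernel_headers_present())
```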

3. Install NVIDIA GPU Drivers

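- Check for the latest NVIDIA drivers:

Visit the NVIDIA Drivers Download page and identify the correct driver version for your GPU and Linux distribution.

- Remove existing drivers (if needed). Quote the pattern so the shell passes it to apt unexpanded:

sudo apt remove --purge 'nvidia-*'

- Optionally add the graphics-drivers PPA (Ubuntu), a community-maintained repository that ships newer driver releases:

sudo add-apt-repository ppa:graphics-drivers/ppa

sudo apt update

- Install the recommended driver. List the available drivers, then install the one marked "recommended":

ubuntu-drivers devices

sudo apt install nvidia-driver-<version>

Replace <version> with the recommended or latest driver version (for example, 535), then reboot so the new kernel module loads:

sudo reboot

- Verify the driver installation:

nvidia-smi

This should display your GPU model, the installed driver version, and the highest CUDA version that driver supports.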
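On Ubuntu, the package to install is the one `ubuntu-drivers devices` flags as recommended. A minimal sketch that extracts it, assuming the `driver : <package> - <flags>` line format shown in the test sample (the exact output can differ between ubuntu-drivers-common releases):

```python
from __future__ import annotations

import re
import shutil
import subprocess

# Matches lines such as "driver   : nvidia-driver-535 - distro non-free recommended"
DRIVER_LINE = re.compile(r"^driver\s*:\s*(\S+)\s+-\s+(.*)$")


def recommended_driver(ubuntu_drivers_output: str) -> str | None:
    """Return the package flagged "recommended", or None if none is flagged."""
    for line in ubuntu_drivers_output.splitlines():
        match = DRIVER_LINE.match(line.strip())
        if match and "recommended" in match.group(2).split():
            return match.group(1)
    return None


def local_recommended_driver() -> str | None:
    """Run `ubuntu-drivers devices` when available and parse its output."""
    if shutil.which("ubuntu-drivers") is None:
        return None
    result = subprocess.run(["ubuntu-drivers", "devices"],
                            capture_output=True, text=True, check=False)
    return recommended_driver(result.stdout)


if __name__ == "__main__":
    package = local_recommended_driver()
    print(f"sudo apt install {package}" if package else "No recommended driver found")
```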

4. Install CUDA Toolkit

- Download the CUDA toolkit installer from the NVIDIA CUDA Toolkit page.

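- Follow the installation instructions for your Linux distribution. If you use NVIDIA's package repository, install a toolkit-only package such as cuda-toolkit rather than the cuda meta-package, which also pulls in a driver and can replace the one you installed in step 3.

- Add CUDA to your PATH and library search path. These exports last only for the current shell, so append them to ~/.bashrc to make them permanent. The ${LD_LIBRARY_PATH:+...} form avoids leaving a trailing colon when the variable was empty:

export PATH=/usr/local/cuda/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

- Verify the CUDA installation:

nvcc --version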
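A quick consistency check compares the toolkit release reported by nvcc --version with the "CUDA Version" in the nvidia-smi header, which is the highest CUDA version the driver supports. This sketch assumes the output formats quoted in its comments and treats a toolkit newer than the driver's reported version as a mismatch; that is conservative, since newer CUDA releases offer some minor-version compatibility.

```python
from __future__ import annotations

import re
import shutil
import subprocess

# "Cuda compilation tools, release 11.8, V11.8.89" (from nvcc --version)
NVCC_RELEASE = re.compile(r"release (\d+)\.(\d+)")
# "... Driver Version: 535.104.05   CUDA Version: 12.2 ..." (nvidia-smi header)
SMI_CUDA = re.compile(r"CUDA Version:\s*(\d+)\.(\d+)")


def parse_version(pattern: re.Pattern, text: str) -> tuple[int, int] | None:
    """Return (major, minor) from the first match of `pattern`, or None."""
    match = pattern.search(text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def toolkit_fits_driver(nvcc_text: str, smi_text: str) -> bool | None:
    """True if the toolkit release is not newer than the driver's CUDA version.

    Returns None when either version cannot be found in the given text.
    """
    toolkit = parse_version(NVCC_RELEASE, nvcc_text)
    driver_max = parse_version(SMI_CUDA, smi_text)
    if toolkit is None or driver_max is None:
        return None
    return toolkit <= driver_max


def _stdout(cmd: list[str]) -> str:
    """Run a command and return its stdout, or "" if it is not installed."""
    if shutil.which(cmd[0]) is None:
        return ""
    return subprocess.run(cmd, capture_output=True, text=True, check=False).stdout


if __name__ == "__main__":
    print(toolkit_fits_driver(_stdout(["nvcc", "--version"]), _stdout(["nvidia-smi"])))
```

A result of None usually means nvcc is not on PATH yet, which points back to the export lines above.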

5. Install cuDNN

- Download cuDNN from the NVIDIA cuDNN page (requires registration).

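- Extract the downloaded archive and copy the headers and libraries into the CUDA directory. The paths below match archives that extract to cuda/include and cuda/lib64; newer releases extract to a versioned directory containing include and lib instead, so adjust the source paths to match. The -P flag preserves the library symlinks instead of copying each library file several times:

sudo cp cuda/include/cudnn*.h /usr/local/cuda/include

sudo cp -P cuda/lib64/libcudnn* /usr/local/cuda/lib64

sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*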
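To confirm which cuDNN the CUDA directory now contains, you can read the version macros from the installed headers. A sketch assuming cuDNN 8 or later (which defines CUDNN_MAJOR, CUDNN_MINOR, and CUDNN_PATCHLEVEL in cudnn_version.h), with a fallback to cudnn.h, where older releases kept them:

```python
from __future__ import annotations

import re
from pathlib import Path

# cudnn_version.h holds the macros in cuDNN 8+; cudnn.h did in older releases
CANDIDATE_HEADERS = ("cudnn_version.h", "cudnn.h")


def parse_cudnn_version(header_text: str) -> tuple[int, int, int] | None:
    """Extract (major, minor, patch) from the #define lines, or None if any is missing."""
    values = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"#define\s+{name}\s+(\d+)", header_text)
        if match is None:
            return None
        values.append(int(match.group(1)))
    return (values[0], values[1], values[2])


def installed_cudnn_version(include_dir: str = "/usr/local/cuda/include") -> tuple[int, int, int] | None:
    """Check each candidate header in `include_dir` and return the first version found."""
    for name in CANDIDATE_HEADERS:
        path = Path(include_dir) / name
        if path.is_file():
            version = parse_cudnn_version(path.read_text(errors="replace"))
            if version is not None:
                return version
    return None


if __name__ == "__main__":
    print("cuDNN version:", installed_cudnn_version() or "not found")
```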

6. Install Deep Learning Frameworks

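- Install Python and pip, and create a virtual environment (recent Ubuntu releases refuse to let pip install packages into the system Python; ~/dl-env is just an example path):

sudo apt install python3 python3-pip python3-venv

python3 -m venv ~/dl-env && source ~/dl-env/bin/activate

- Install frameworks like TensorFlow or PyTorch with GPU support: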

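- TensorFlow:

pip install tensorflow

- PyTorch:

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu<version>

Replace <version> with your CUDA version without the dot (e.g., 118 for CUDA 11.8, giving cu118). PyTorch wheels bundle their own CUDA runtime, so choose a CUDA version that PyTorch publishes wheels for and that your driver supports.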
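The index URL follows directly from the CUDA version, so a tiny helper can build it. This sketch only formats the string; it does not check that PyTorch actually publishes wheels for that version, so confirm against the install selector on pytorch.org.

```python
from __future__ import annotations


def pytorch_index_url(cuda_version: str) -> str:
    """Build the PyTorch wheel index URL for a CUDA version such as "11.8".

    Only the major and minor parts are used, so "12.1.105" maps to cu121.
    """
    parts = cuda_version.strip().split(".")
    if len(parts) < 2 or not (parts[0].isdigit() and parts[1].isdigit()):
        raise ValueError(f"unexpected CUDA version: {cuda_version!r}")
    return f"https://download.pytorch.org/whl/cu{parts[0]}{parts[1]}"


def pip_install_command(cuda_version: str) -> list[str]:
    """Return the pip command from this step as an argument list."""
    return ["pip", "install", "torch", "torchvision", "torchaudio",
            "--index-url", pytorch_index_url(cuda_version)]


if __name__ == "__main__":
    print(" ".join(pip_install_command("11.8")))
```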


7. Test Your Setup

- Run a quick test to confirm that each framework can see the GPU:

- TensorFlow:

python3

import tensorflow as tf

print("GPUs Available: ", len(tf.config.list_physical_devices('GPU')))

- PyTorch:

python3

import torch

print("CUDA Available: ", torch.cuda.is_available())

print("GPU Name: ", torch.cuda.get_device_name(0) if torch.cuda.is_available() else "none")
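The two checks above can be combined into one script that also runs a tiny tensor operation on the GPU, which confirms that work actually executes there rather than only that a device is visible. A sketch that skips whichever framework is not installed; the report keys are arbitrary names chosen for this example.

```python
from __future__ import annotations

import importlib.util


def gpu_report() -> dict:
    """Report GPU visibility for PyTorch and TensorFlow; None means not installed."""
    report: dict = {"torch": None, "tensorflow": None}

    if importlib.util.find_spec("torch") is not None:
        import torch

        available = torch.cuda.is_available()
        info = {
            "cuda_available": available,
            "device_count": torch.cuda.device_count(),
            "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        }
        if available:
            # A 2x2 matrix of ones squared has every entry equal to 2, so the sum is 8
            x = torch.ones(2, 2, device="cuda")
            info["gpu_name"] = torch.cuda.get_device_name(0)
            info["matmul_ok"] = float((x @ x).sum()) == 8.0
        report["torch"] = info

    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf

        report["tensorflow"] = {"gpus": len(tf.config.list_physical_devices("GPU"))}

    return report


if __name__ == "__main__":
    for framework, info in gpu_report().items():
        print(f"{framework}: {info if info is not None else 'not installed'}")
```

If built_with_cuda is None, pip installed a CPU-only PyTorch build, which usually means the --index-url option was left out.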


8. Monitor GPU Usage

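- Use nvidia-smi to monitor GPU utilization, memory use, and running processes:

nvidia-smi

To refresh the display every second while a job runs, add -l 1:

nvidia-smi -l 1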
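For logging or scripting, nvidia-smi also has a CSV query mode. The sketch below polls it once and parses the result, assuming the query fields listed in QUERY_FIELDS (run nvidia-smi --help-query-gpu to see which fields your driver supports); values the GPU does not report, such as [N/A], become None.

```python
from __future__ import annotations

import shutil
import subprocess

# Fields passed to --query-gpu; see `nvidia-smi --help-query-gpu` for others
QUERY_FIELDS = ("index", "name", "utilization.gpu", "memory.used", "memory.total")


def _as_int(value: str) -> int | None:
    """Convert a numeric field; values such as "[N/A]" become None."""
    return int(value) if value.isdigit() else None


def parse_gpu_stats(csv_text: str) -> list[dict]:
    """Parse `--format=csv,noheader,nounits` output, one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        if len(values) != len(QUERY_FIELDS):
            continue  # skip lines that do not have one value per field
        index, name, util, used, total = values
        stats.append({
            "index": _as_int(index),
            "name": name,
            "utilization_pct": _as_int(util),
            "memory_used_mib": _as_int(used),
            "memory_total_mib": _as_int(total),
        })
    return stats


def query_gpus() -> list[dict]:
    """Query nvidia-smi once; return an empty list if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    cmd = ["nvidia-smi", "--query-gpu=" + ",".join(QUERY_FIELDS),
           "--format=csv,noheader,nounits"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    return parse_gpu_stats(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(gpu)
```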

9. Optional: Install Docker for GPU Workloads

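- Install Docker and the NVIDIA Container Toolkit for GPU-accelerated containers. The nvidia-container-toolkit package comes from NVIDIA's own repository, so add that repository first as described in the NVIDIA Container Toolkit installation guide, then register the NVIDIA runtime with Docker:

sudo apt install docker.io nvidia-container-toolkit

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

- Test GPU access from a container:

sudo docker run --rm --gpus all nvidia/cuda:11.8.0-base-ubuntu22.04 nvidia-smi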
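If you script the container check, building the command as an argument list avoids shell-quoting problems. A sketch; the image tag is an example (pick a tag from the nvidia/cuda page on Docker Hub that matches your CUDA version), and it calls docker without sudo, which assumes your user is in the docker group.

```python
from __future__ import annotations

import shutil
import subprocess

# Example tag; choose one that matches your CUDA version
DEFAULT_IMAGE = "nvidia/cuda:11.8.0-base-ubuntu22.04"


def gpu_smoke_test_cmd(image: str = DEFAULT_IMAGE, gpus: str = "all") -> list[str]:
    """Build the `docker run` argument list that runs nvidia-smi in a container."""
    return ["docker", "run", "--rm", "--gpus", gpus, image, "nvidia-smi"]


def run_gpu_smoke_test(image: str = DEFAULT_IMAGE) -> bool:
    """Return True if the container could run nvidia-smi successfully."""
    if shutil.which("docker") is None:
        return False
    result = subprocess.run(gpu_smoke_test_cmd(image),
                            capture_output=True, text=True, check=False)
    return result.returncode == 0


if __name__ == "__main__":
    print("GPU visible in container:", run_gpu_smoke_test())
```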

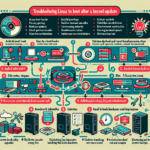

10. Troubleshooting

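- If the driver fails to load, check whether Secure Boot is enabled (mokutil --sb-state). Either disable it in the UEFI firmware settings or enroll a Machine Owner Key (MOK) so the kernel can load the DKMS-built module.

- Check that your driver version supports your kernel. After a kernel update, dkms status shows whether the NVIDIA module was rebuilt for the new kernel.

- Check whether another application is using the GPU: the Processes section of nvidia-smi lists each process and its GPU memory use.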
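When the driver fails to load, a useful first check is which GPU kernel module is actually loaded. This sketch reads /proc/modules (the same data lsmod prints); finding nouveau instead of nvidia usually means the open-source driver claimed the GPU first. The diagnosis strings are illustrative.

```python
from __future__ import annotations

from pathlib import Path


def loaded_modules(proc_modules_text: str) -> set[str]:
    """Return module names from /proc/modules text (first column of each line)."""
    return {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}


def driver_diagnosis(proc_modules_text: str) -> str:
    """Summarize which GPU kernel module, if any, is loaded."""
    modules = loaded_modules(proc_modules_text)
    if "nvidia" in modules:
        return "nvidia module loaded"
    if "nouveau" in modules:
        return "nouveau is loaded instead of nvidia"
    return "no GPU kernel module loaded"


def diagnose_local() -> str:
    """Run the diagnosis against this machine's /proc/modules."""
    path = Path("/proc/modules")
    if not path.exists():
        return "no /proc/modules (not Linux?)"
    return driver_diagnosis(path.read_text())


if __name__ == "__main__":
    print(diagnose_local())
```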

By following these steps, you should have a fully configured NVIDIA GPU environment tailored for deep learning workloads on Linux.