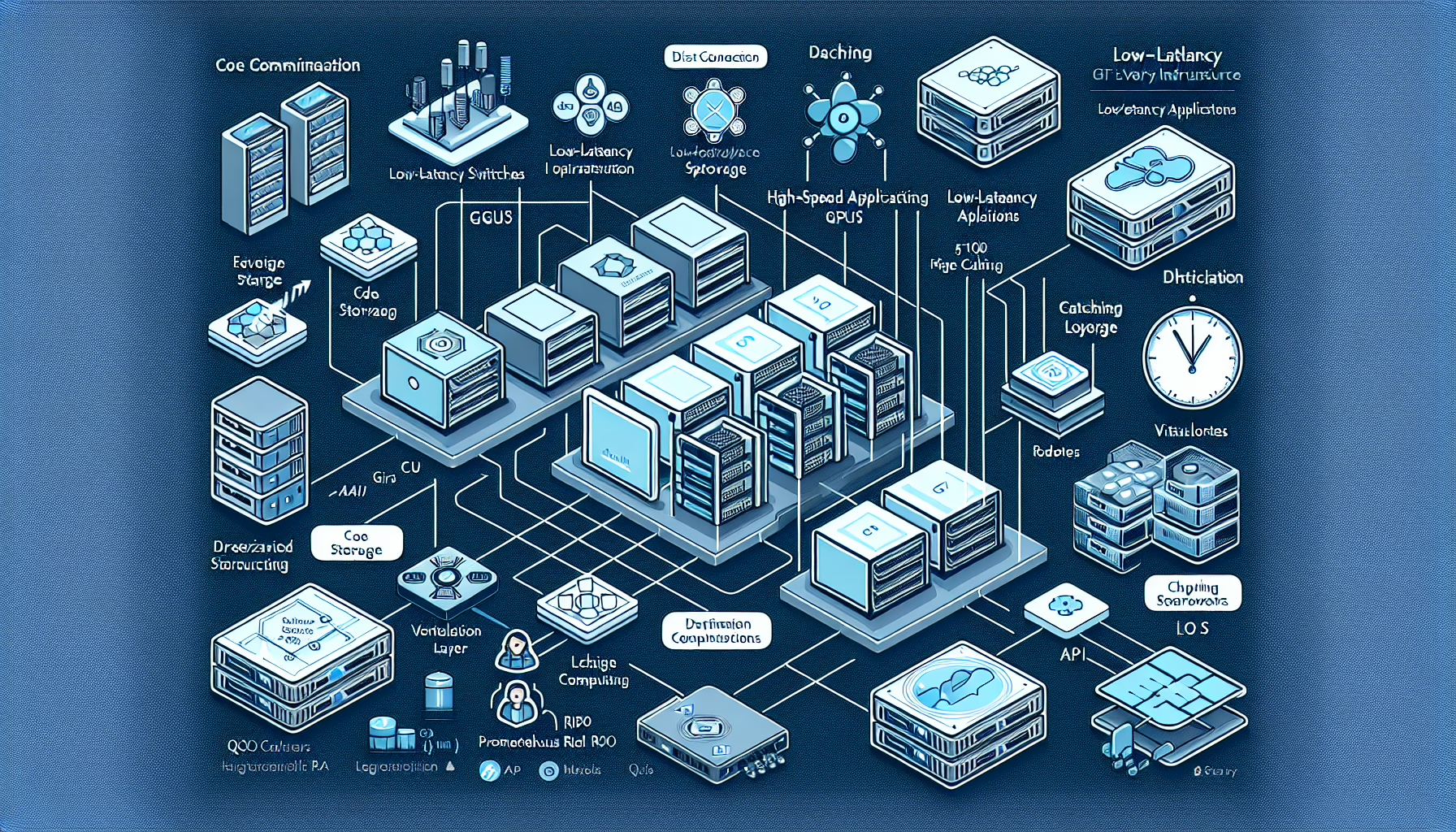

Optimizing IT infrastructure for low-latency applications requires coordinated work across hardware, software, networking, and system design. The key steps are:

1. Network Optimization

- Minimize hops: Reduce the number of network hops between components by simplifying network architecture.

- Use low-latency switches and routers: Deploy high-performance networking hardware designed for low-latency environments.

- Leverage high-speed connections: Use technologies like fiber optics, 10Gbps, 25Gbps, or even 100Gbps Ethernet connections.

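- Enable jumbo frames: Configure jumbo frames (typically a 9000-byte MTU) to reduce per-packet overhead for high-throughput applications. Every device on the path must use the same MTU, and the gain is mainly in throughput and CPU load rather than in per-packet latency.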

- Reduce network congestion: Use Quality of Service (QoS) settings to prioritize latency-sensitive traffic.

- Use direct connections: For critical applications, establish direct server-to-server connections to bypass intermediaries.
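
To make the jumbo-frame trade-off concrete, here is a minimal sketch that compares how much of each Ethernet frame carries application payload at a 1500-byte and a 9000-byte MTU. It assumes TCP over IPv4 with no header options; the function names are illustrative and not taken from any library.

```python
# Fixed per-frame costs on Ethernet, in bytes.
ETH_HEADER = 14      # destination MAC, source MAC, EtherType
ETH_FCS = 4          # frame check sequence
ETH_PREAMBLE = 8     # preamble plus start-of-frame delimiter
ETH_GAP = 12         # minimum inter-frame gap
IP_TCP_HEADERS = 40  # IPv4 (20) + TCP (20), assuming no options


def payload_efficiency(mtu: int) -> float:
    """Fraction of on-wire bytes that carry application payload."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_HEADER + ETH_FCS + ETH_PREAMBLE + ETH_GAP
    return payload / on_wire


def frames_needed(total_bytes: int, mtu: int) -> int:
    """Number of frames required to move total_bytes of payload."""
    payload = mtu - IP_TCP_HEADERS
    return -(-total_bytes // payload)  # ceiling division


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: efficiency {payload_efficiency(mtu):.4f}, "
              f"frames per MiB {frames_needed(1024 * 1024, mtu)}")
```

Under these assumptions the payload share rises from roughly 95% to roughly 99%, and the number of frames per mebibyte drops by about a factor of six, which is where the CPU and interrupt savings come from.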

2. Storage Optimization

- Deploy NVMe drives: Non-Volatile Memory Express (NVMe) SSDs provide ultra-low latency compared to traditional spinning drives or even SATA SSDs.

- Optimize RAID configurations: Use RAID setups that balance redundancy and performance, such as RAID 10.

- Implement caching: Use in-memory caching tools like Redis or Memcached to reduce read/write latency.

- Reduce I/O bottlenecks: Ensure proper sizing of storage controllers and adequate disk I/O capacity.

- Use tiered storage: Store frequently accessed data on faster storage tiers and less-accessed data on slower ones.
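
The caching advice above usually takes the form of a cache-aside pattern: check the cache first, and go to slower storage only on a miss. The sketch below illustrates the idea with a small in-process LRU cache standing in for Redis or Memcached. `LRUCache` and `slow_lookup` are hypothetical names used only for this example.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class LRUCache:
    """Tiny in-process LRU cache, standing in for Redis or Memcached."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], object]) -> object:
        """Return the cached value, or call loader(key) on a miss."""
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._items[key]
        self.misses += 1
        value = loader(key)  # slow path: go to the backing store
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
        return value


def slow_lookup(key):
    """Hypothetical backing store; a real one would query disk or a database."""
    return f"value-for-{key}"


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    for key in ["a", "b", "a", "c", "b"]:
        cache.get_or_load(key, slow_lookup)
    print("hits:", cache.hits, "misses:", cache.misses)
```

Tracking hits and misses matters in practice: a cache with a low hit rate adds a lookup to every request without removing many slow reads.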

3. Server Optimization

- Use high-performance CPUs: Deploy servers with CPUs optimized for single-thread performance if the application is single-threaded or latency-sensitive.

- Maximize RAM: Ensure applications have sufficient RAM to avoid swapping to disk.

- Enable NUMA-aware processing: On multi-socket servers, keep each process's threads and memory on the same NUMA node so that memory accesses do not have to cross the socket interconnect.

- Minimize background processes: Disable unnecessary services and processes that consume CPU cycles.
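
One common way to act on the CPU and NUMA advice above is to pin a latency-sensitive process to specific cores so the scheduler does not migrate it. The following is a minimal sketch using Python's `os.sched_setaffinity`, which is available on Linux but not on macOS or Windows. The helper name `pin_current_process` is an assumption for illustration.

```python
import os
from typing import Optional, Set


def pin_current_process(cpus: Set[int]) -> Optional[Set[int]]:
    """Restrict the current process to the given CPU cores.

    Returns the affinity set in effect afterwards, or None on platforms
    where os.sched_setaffinity is unavailable.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    os.sched_setaffinity(0, cpus)  # pid 0 means "the calling process"
    return set(os.sched_getaffinity(0))


if __name__ == "__main__":
    if hasattr(os, "sched_getaffinity"):
        allowed = os.sched_getaffinity(0)
        target = {min(allowed)}  # pick one core this process may already use
        print("pinned to:", pin_current_process(target))
    else:
        print("CPU affinity is not supported on this platform")
```

This only controls where threads run. Binding memory to the same NUMA node is typically done outside the process, for example with `numactl --cpunodebind` and `--membind`.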

4. Virtualization and Containerization

- Optimize hypervisor settings: Tune hypervisors such as VMware ESXi or KVM for minimal overhead, for example by reserving CPU and memory for latency-sensitive VMs.

- Deploy Kubernetes effectively: For containerized workloads, ensure Kubernetes is configured to minimize pod-to-pod communication latency.

- Use bare-metal servers: For applications that demand the lowest latency, avoid virtualization and deploy directly on physical servers.

5. GPU Optimization

- Use specialized GPUs: For AI and machine learning workloads, use data-center GPUs such as NVIDIA A100, H100, or AMD Instinct MI200, which offer high memory bandwidth and compute throughput.

- Enable GPUDirect RDMA: On NVIDIA GPUs, use GPUDirect RDMA so that network adapters can read and write GPU memory directly, avoiding extra copies through host memory and keeping the CPU out of the data path.

- Optimize GPU memory: Ensure GPU memory is sufficient for workloads to avoid expensive memory transfers.
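
A quick way to check the GPU memory point above before deployment is a back-of-the-envelope budget. The sketch below assumes the workload's footprint can be approximated as model weights plus a fixed amount of working memory. The function name, the 10% headroom, and the example figures are all illustrative assumptions, not measurements.

```python
GIB = 1024 ** 3


def fits_in_gpu_memory(param_count: int,
                       bytes_per_param: int,
                       working_bytes: int,
                       gpu_memory_bytes: int,
                       headroom: float = 0.10) -> bool:
    """Return True if weights plus working buffers fit with headroom to spare."""
    required = param_count * bytes_per_param + working_bytes
    usable = gpu_memory_bytes * (1.0 - headroom)
    return required <= usable


if __name__ == "__main__":
    # Hypothetical model: 7 billion parameters stored as 16-bit values,
    # plus 4 GiB of activations and scratch buffers.
    for gpu_gib in (16, 40):
        ok = fits_in_gpu_memory(7_000_000_000, 2, 4 * GIB, gpu_gib * GIB)
        print(f"{gpu_gib} GiB GPU: {'fits' if ok else 'does not fit'}")
```

When the estimate does not fit, the workload will spill to host memory or need to be split across devices, and both options add the transfers this section is trying to avoid.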

6. Application Optimization

- Code optimization: Profile and optimize your application code to remove bottlenecks, improve threading, and reduce computational overhead.

- Minimize API calls: Reduce the number of external API calls or optimize them for faster responses.

- Use edge computing: Deploy applications closer to end-users or data sources to minimize geographic latency.
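
For the code-optimization step above, profiling should come before any rewrite so that effort goes to the real hot spot. Here is a minimal sketch using the standard-library `cProfile` and `pstats` modules. `handle_request` and `slow_sum` are hypothetical stand-ins for application code.

```python
import cProfile
import io
import pstats


def slow_sum(n: int) -> int:
    """Deliberately slow loop that should dominate the profile."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def handle_request() -> int:
    """Hypothetical request handler that calls the slow function."""
    return slow_sum(200_000)


def profile(func):
    """Run func under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(5)  # top 5 by cumulative time
    return result, buffer.getvalue()


if __name__ == "__main__":
    _, report = profile(handle_request)
    print(report)
```

Sorting by cumulative time shows which call paths account for the most wall-clock time, which is usually the right starting point for latency work.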

7. Monitoring and Troubleshooting

- Use APM tools: Application Performance Monitoring tools like Datadog, Dynatrace, or New Relic can help identify latency issues.

- Implement real-time monitoring: Use tools like Prometheus and Grafana for real-time infrastructure monitoring.

- Analyze bottlenecks: Continuously analyze traffic patterns and identify components causing delays.

- Stress test: Perform regular load testing to understand infrastructure limits and identify potential latency issues.
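
When analyzing load-test or monitoring data, averages hide the slow requests that users actually notice, so latency is usually reported as percentiles such as p50 and p99. The sketch below uses the nearest-rank definition of a percentile, which is one of several conventions. Both helper names are assumptions for illustration.

```python
import math
import time
from typing import Callable, List, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def measure_latencies(func: Callable[[], object], runs: int) -> List[float]:
    """Time func() `runs` times and return the durations in milliseconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


if __name__ == "__main__":
    latencies = measure_latencies(lambda: sum(range(10_000)), runs=200)
    print(f"p50 = {percentile(latencies, 50):.3f} ms")
    print(f"p99 = {percentile(latencies, 99):.3f} ms")
```

A large gap between p50 and p99 usually points to contention, garbage collection pauses, or queuing rather than to uniformly slow code.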

8. Optimize Kubernetes for Low-Latency Apps

- Node affinity: Use node affinity rules to place latency-sensitive workloads on specific nodes.

- Pod networking: Choose a CNI plugin with an efficient data path, such as Calico or Cilium.

- Horizontal Pod Autoscaling: Ensure pods scale based on real-time metrics to avoid resource starvation.
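
As an illustration of the node affinity rule above, the sketch below builds a Pod manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The label key `example.com/latency-tier`, its value, the pod name, and the image are hypothetical; nodes would first need a matching label, for example via `kubectl label nodes <node-name> example.com/latency-tier=low`.

```python
import json

# Hypothetical node label used to mark low-latency nodes.
NODE_LABEL_KEY = "example.com/latency-tier"
NODE_LABEL_VALUE = "low"


def build_pod_manifest(name: str, image: str) -> dict:
    """Build a Pod manifest that may only be scheduled on labeled nodes."""
    match_expression = {
        "key": NODE_LABEL_KEY,
        "operator": "In",
        "values": [NODE_LABEL_VALUE],
    }
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "affinity": {
                "nodeAffinity": {
                    # Hard requirement: the scheduler skips non-matching nodes.
                    "requiredDuringSchedulingIgnoredDuringExecution": {
                        "nodeSelectorTerms": [
                            {"matchExpressions": [match_expression]}
                        ]
                    }
                }
            },
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    manifest = build_pod_manifest("latency-api", "registry.example.com/latency-api:1.0")
    print(json.dumps(manifest, indent=2))
```

A required rule keeps the pod pending if no labeled node has capacity. Where that is too strict, `preferredDuringSchedulingIgnoredDuringExecution` expresses the same placement as a preference instead.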

9. Reduce Latency in Backup and Storage

- Use snapshots efficiently: Optimize snapshot schedules to avoid performance degradation during backups.

- Leverage backup tiering: Keep recent backups on high-speed storage for quick recovery, and move older backups to slower, cheaper tiers.

10. General Best Practices

- Deploy edge computing: Move workloads closer to the data source or end-user to reduce geographic latency.

- Minimize virtualization overhead: For extremely latency-sensitive applications, consider bare-metal deployments.

- Optimize power and cooling: Ensure consistent power and cooling to prevent hardware throttling due to overheating.

- Update firmware and drivers: Regularly update hardware firmware and drivers to gain performance improvements.

By continuously monitoring and tuning your IT infrastructure, you can ensure low-latency performance for critical applications. Regular reviews of workloads, hardware performance, and system configurations will help identify new opportunities for optimization.