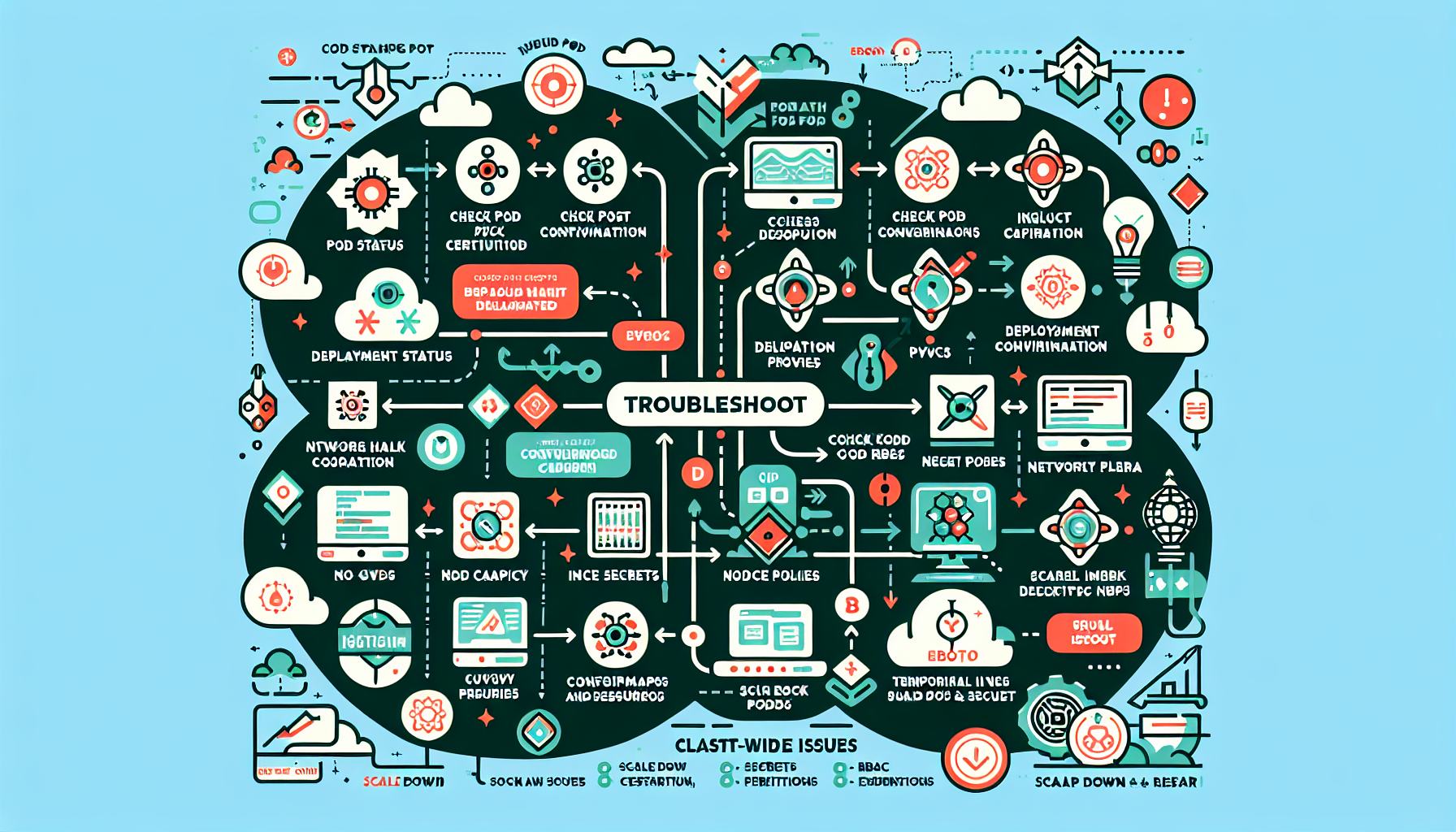

Troubleshooting pod deployment issues in Kubernetes can be a complex task, but by following a systematic approach, you can pinpoint and resolve the problem efficiently. Here’s a step-by-step guide:

1. Check the Pod Status

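- Use the `kubectl get pods` command to check the status of the pod:

```bash
kubectl get pods -n <namespace>
```

- Possible statuses:
  - `Pending`: The pod has been accepted but is still waiting to be scheduled or for its containers to be created (for example, while images are pulled).
  - `Running`: The pod is bound to a node and at least one container is running, but it might still have issues (check the `READY` column).
  - `CrashLoopBackOff`: A container is repeatedly crashing, and the kubelet waits longer before each restart.
  - `Error`: A container exited with an error, for example during startup.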
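The `STATUS` column alone can be misleading, because a pod can report `Running` while its containers are not ready. The hypothetical `unhealthy_pods` function below is a minimal sketch that filters `kubectl get pods --no-headers` and prints every pod that is neither `Completed` nor fully ready. It assumes the default column order `NAME READY STATUS RESTARTS AGE`.

```bash
# Hypothetical helper: list pods that are not Completed and not fully ready.
# Assumes the default column order: NAME READY STATUS RESTARTS AGE.
# Usage: unhealthy_pods <namespace>
unhealthy_pods() {
  kubectl get pods -n "$1" --no-headers |
    awk '{
      split($2, ready, "/")   # READY column, e.g. "0/1" -> ready[1]=0, ready[2]=1
      if ($3 != "Completed" && ($3 != "Running" || ready[1] != ready[2]))
        print $1, $2, $3      # NAME READY STATUS
    }'
}
```

For example, `unhealthy_pods <namespace>` prints lines such as `api-1 0/1 CrashLoopBackOff`. These are the pods worth describing in the next step.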

2. Inspect Events for Errors

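- Check the events recorded for the pod for clues about scheduling, resource limits, or other errors. `kubectl describe pod` lists them in the `Events` section at the end of its output:

```bash
kubectl describe pod <pod-name> -n <namespace>
```

- Look for messages such as:
  - `FailedScheduling`: The scheduler could not place the pod, for example because of node affinity rules, node taints the pod does not tolerate, or insufficient resources.
  - `FailedMount`: A volume could not be mounted, which indicates issues with persistent volumes or storage.
  - `ImagePullBackOff`: The container image could not be pulled, and the kubelet is backing off before retrying.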
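`kubectl describe` mixes normal and warning events. Events are also only kept for a limited time (one hour by default on the API server). The sketch below is a hypothetical helper that shows only the `Warning` events for one pod, sorted by time. It uses the `involvedObject.name` and `type` field selectors that `kubectl get events` supports.

```bash
# Hypothetical helper: show only Warning events for one pod, oldest first.
# Usage: pod_warnings <pod-name> <namespace>
pod_warnings() {
  kubectl get events -n "$2" \
    --field-selector "involvedObject.name=$1,type=Warning" \
    --sort-by=.lastTimestamp
}
```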

3. Verify Pod Logs

- Check the logs of the failing container to identify runtime errors:

```bash
kubectl logs <pod-name> -n <namespace>
```

- If the pod has multiple containers, specify the container name:

```bash
kubectl logs <pod-name> -n <namespace> -c <container-name>
```

- Look for stack traces, configuration issues, or application-related errors.
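When a pod is in `CrashLoopBackOff`, the current container may have just restarted and have almost no output. The logs that explain the crash usually belong to the previous instance. The hypothetical helpers below are a sketch that does two things:

- fetches those logs with `--previous`;
- prints each container's restart count, last termination reason, and exit code.

```bash
# Hypothetical helpers for a container that keeps restarting.

# Last 100 log lines from the previous (crashed) instance of a container.
# Usage: previous_logs <pod-name> <namespace> [container-name]
previous_logs() {
  if [ -n "${3:-}" ]; then
    kubectl logs "$1" -n "$2" -c "$3" --previous --tail=100
  else
    kubectl logs "$1" -n "$2" --previous --tail=100
  fi
}

# One line per container: name, restart count, last termination reason, exit code.
# Usage: last_termination <pod-name> <namespace>
last_termination() {
  kubectl get pod "$1" -n "$2" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.restartCount}{"\t"}{.lastState.terminated.reason}{"\t"}{.lastState.terminated.exitCode}{"\n"}{end}'
}
```

For instance, reason `OOMKilled` with exit code 137 suggests the container exceeded its memory limit rather than hitting an application bug.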

4. Check Deployment Configuration

- Review the live deployment manifest for misconfigurations:

```bash
kubectl get deployment <deployment-name> -n <namespace> -o yaml
```

- Common issues:
  - Missing or incorrect environment variables.
  - Incorrect image name or tag.
  - Missing volume mounts or incorrect mount paths.
  - Resource requests that exceed the allocatable capacity of every node (the scheduler uses requests, not limits, when placing pods).
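Rather than reading the full YAML, it can help to pull out the fields that most often go wrong. This sketch defines two hypothetical helpers:

- one prints the exact image reference of every container in the pod template;
- one waits for the current rollout using `kubectl rollout status` with a timeout. The 120-second value is an arbitrary choice.

```bash
# Hypothetical helpers for reviewing a deployment.

# Print each container's name and the exact image reference in the pod template.
# Usage: deployment_images <deployment-name> <namespace>
deployment_images() {
  kubectl get deployment "$1" -n "$2" \
    -o jsonpath='{range .spec.template.spec.containers[*]}{.name}{"\t"}{.image}{"\n"}{end}'
}

# Wait up to 120 seconds for the latest rollout; a non-zero exit status
# means the new pods did not become available in time.
# Usage: wait_for_rollout <deployment-name> <namespace>
wait_for_rollout() {
  kubectl rollout status "deployment/$1" -n "$2" --timeout=120s
}
```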

5. Check Node Capacity and Scheduling

- Ensure the nodes have sufficient resources (CPU, memory, GPU, etc.) to schedule the pod:

```bash
kubectl describe nodes
```

- Look for:
  - Insufficient allocatable CPU or memory (compare each node's `Allocated resources` section with the pod's requests).
  - Node taints that the pod does not tolerate.
  - Node affinity or pod affinity/anti-affinity rules preventing placement.
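The sketch below narrows `kubectl describe nodes` down to the parts that usually explain a `FailedScheduling` event: each node's taints, and the `Allocated resources` summary of one node. The helper names are hypothetical. The `grep -A 8` window is an assumption about how many lines that section spans in your kubectl version.

```bash
# Hypothetical helpers for checking why the scheduler cannot place a pod.

# List every node with the keys and effects of its taints (<none> if untainted).
# Usage: node_taints
node_taints() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,TAINT_KEYS:.spec.taints[*].key,EFFECTS:.spec.taints[*].effect'
}

# Show the "Allocated resources" section of `kubectl describe node`, which
# totals the requests and limits of the pods already placed on that node.
# Usage: node_allocated <node-name>
node_allocated() {
  kubectl describe node "$1" | grep -A 8 'Allocated resources:'
}
```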

6. Inspect Persistent Volume Claims (PVC)

- If the pod uses storage, verify the persistent volume claims:

```bash
kubectl get pvc -n <namespace>
kubectl describe pvc <pvc-name> -n <namespace>
```

- Common issues:
  - The PVC is not bound to a PersistentVolume (PV).
  - Storage class misconfiguration.
  - Insufficient storage capacity.
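A PVC that stays `Pending` usually means that no PersistentVolume matches it, or that its storage class cannot provision one. `kubectl get storageclass` shows which classes exist and marks the default. The sketch below is a hypothetical helper that lists PVCs that are not `Bound`. It relies on `STATUS` being the second column of `kubectl get pvc`.

```bash
# Hypothetical helper: list PVCs in a namespace whose STATUS is not "Bound".
# Usage: unbound_pvcs <namespace>
unbound_pvcs() {
  kubectl get pvc -n "$1" --no-headers | awk '$2 != "Bound" { print $1, $2 }'
}
```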

7. Check Image Configuration

- Verify that the container image is correct and accessible:
  - Check that the image name and tag are correct.
  - Ensure the image exists in the container registry.
  - If the image is private, ensure that an image pull secret exists in the pod's namespace and is referenced in the pod's `imagePullSecrets` (or attached to its service account):

```bash
kubectl get secret -n <namespace>
```
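A pull secret only helps if the pod references it, either directly in `spec.imagePullSecrets` or through its service account. The hypothetical helpers below are a sketch that does two things:

- prints the pull secrets a pod actually uses;
- attaches an existing secret to a service account with `kubectl patch`.

The secret is whatever you created beforehand, for example with `kubectl create secret docker-registry`.

```bash
# Hypothetical helpers for image pull secrets.

# Show which image pull secrets the pod actually references.
# Usage: pod_pull_secrets <pod-name> <namespace>
pod_pull_secrets() {
  kubectl get pod "$1" -n "$2" -o jsonpath='{.spec.imagePullSecrets[*].name}'
}

# Attach an existing pull secret to a service account so that new pods using
# that account pick it up. Caution: this patch may replace any imagePullSecrets
# already listed on the account; check with `kubectl get serviceaccount` first.
# Usage: attach_pull_secret <service-account> <namespace> <secret-name>
attach_pull_secret() {
  kubectl patch serviceaccount "$1" -n "$2" \
    -p "{\"imagePullSecrets\": [{\"name\": \"$3\"}]}"
}
```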

8. Inspect Network Policies

- If the pod relies on network communication, verify that NetworkPolicies are not blocking traffic:

```bash
kubectl get networkpolicy -n <namespace>
```

- Ensure that the required ports and communication paths are allowed.
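Listing the policies does not show whether a specific connection is allowed, so testing the connection from inside the namespace is often quicker. This sketch uses a hypothetical `probe_url` helper that starts a throwaway busybox pod and fetches a URL with a 5-second timeout. NetworkPolicies select pods by label, so the optional third argument sets the test pod's labels to mimic the real client (for example `app=frontend`, a placeholder label).

```bash
# Hypothetical helper: fetch a URL from a throwaway busybox pod in the given
# namespace, so the request is subject to the NetworkPolicies there.
# The optional third argument sets the test pod's labels (default run=nettest).
# Usage: probe_url <namespace> <url> [labels]
probe_url() {
  kubectl run nettest --rm -i --restart=Never -n "$1" --image=busybox \
    --labels="${3:-run=nettest}" -- wget -qO- -T 5 "$2"
}
```

Suppose the target Service has ready endpoints (check with `kubectl get endpoints <service-name> -n <namespace>`). In that case, a timeout here points toward a NetworkPolicy or DNS problem rather than the application itself.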

9. Examine Pod Health Probes

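- Check the readiness and liveness probes in the deployment configuration:

```yaml
livenessProbe:
  httpGet:
    path: /healthz
    port: 8080
readinessProbe:
  httpGet:
    path: /ready
    port: 8080
```

- Misconfigured probes can cause pods to restart or fail to become ready. A failing liveness probe makes the kubelet restart the container. A failing readiness probe removes the pod from Service endpoints without restarting it.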
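This sketch shows which probe settings are actually in effect and whether they are failing. It defines two hypothetical helpers:

- one prints each container's liveness and readiness probe as JSON;
- one lists events with reason `Unhealthy`, which is the reason the kubelet records for failed probes.

If an application simply starts slowly, raising `initialDelaySeconds` or adding a `startupProbe` is a common adjustment.

```bash
# Hypothetical helpers for probe problems.

# Print each container's liveness and readiness probe definitions (as JSON).
# Usage: show_probes <deployment-name> <namespace>
show_probes() {
  kubectl get deployment "$1" -n "$2" \
    -o jsonpath='{range .spec.template.spec.containers[*]}{.name}{"\n  liveness: "}{.livenessProbe}{"\n  readiness: "}{.readinessProbe}{"\n"}{end}'
}

# Failed liveness/readiness probes show up as events with reason "Unhealthy".
# Usage: probe_failures <namespace>
probe_failures() {
  kubectl get events -n "$1" --field-selector reason=Unhealthy --sort-by=.lastTimestamp
}
```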

10. Validate ConfigMaps and Secrets

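- Ensure that the ConfigMaps and Secrets referenced by the pod exist in the pod's namespace and contain the expected keys:

```bash
kubectl get configmap -n <namespace>
kubectl get secret -n <namespace>
```

- Missing references typically show up in two ways. A missing ConfigMap or Secret referenced in an environment variable leaves the container in `CreateContainerConfigError`. One referenced as a volume produces a `FailedMount` event. If the application reads them through the Kubernetes API, also ensure its service account has permission to do so.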
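The following sketch cross-checks a pod's references against what exists in its namespace. The hypothetical `missing_configmaps` helper collects ConfigMap names from the pod's volumes, `envFrom`, and `env` entries. It then reports any that `kubectl get configmap` cannot find. Two limitations apply:

- it does not inspect init containers or projected volumes;
- it also reports references marked `optional: true`.

The same pattern works for Secrets with the `secret.secretName`, `secretRef.name`, and `secretKeyRef.name` fields.

```bash
# Hypothetical helper: report ConfigMaps that a pod references (as a volume,
# via envFrom, or via env valueFrom) but that do not exist in its namespace.
# Init containers and projected volumes are not checked in this sketch.
# Usage: missing_configmaps <pod-name> <namespace>
missing_configmaps() {
  local refs cm
  refs=$(kubectl get pod "$1" -n "$2" -o jsonpath='{.spec.volumes[*].configMap.name} {.spec.containers[*].envFrom[*].configMapRef.name} {.spec.containers[*].env[*].valueFrom.configMapKeyRef.name}')
  # $refs is intentionally unquoted so that each name becomes its own line.
  for cm in $(printf '%s\n' $refs | sort -u); do
    if ! kubectl get configmap "$cm" -n "$2" >/dev/null 2>&1; then
      echo "missing ConfigMap: $cm"
    fi
  done
}
```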

11. Check Cluster-Wide Issues

- Investigate whether cluster-wide issues are affecting pod deployment by reviewing the status of nodes and key cluster components:

```bash
kubectl get nodes
kubectl get componentstatuses   # short name: cs; deprecated since Kubernetes v1.19
```

- Check for resource constraints or node failures.
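For a quicker overall picture, the API server's `/readyz?verbose` endpoint reports the result of each readiness check, including etcd connectivity. It is the usual alternative to component statuses. The sketch below defines two hypothetical helpers:

- the first queries that endpoint;
- the second lists nodes whose status is anything other than plain `Ready`.

```bash
# Hypothetical helpers for a quick cluster health check.

# Per-check readiness report from the API server.
api_readiness() {
  kubectl get --raw='/readyz?verbose'
}

# Nodes whose STATUS is anything other than plain "Ready"
# (e.g. "NotReady", or "Ready,SchedulingDisabled" for cordoned nodes).
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1, $2 }'
}
```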

12. Debug with Temporary Pods

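- Use a temporary pod to debug the environment from inside the cluster (for example, to test DNS resolution or connectivity to a Service):

```bash
kubectl run debug-pod --rm -i --tty --restart=Never --image=busybox -n <namespace> -- sh
```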
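One common use of a temporary pod is checking cluster DNS. This sketch wraps that check in a hypothetical `dns_check` helper that runs `nslookup` in a throwaway pod. By default it looks up the `kubernetes.default` Service name. Pinning `busybox:1.28` is an assumption carried over from older Kubernetes DNS debugging guides, which avoided later busybox images because of unreliable `nslookup` behavior.

```bash
# Hypothetical helper: resolve a name from a throwaway pod to check cluster DNS.
# The busybox:1.28 pin is an assumption (see the note above).
# Usage: dns_check <namespace> [name]
dns_check() {
  kubectl run dns-test --rm -i --restart=Never -n "$1" --image=busybox:1.28 \
    -- nslookup "${2:-kubernetes.default}"
}
```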

13. Review Kubernetes Logs

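- Examine logs from the kubelet and the control plane components for issues:
  - On the node, check the kubelet logs for container runtime, image pull, volume mount, or probe errors. Scheduling decisions are made by the kube-scheduler, so check its logs for scheduling problems.
  - Use your monitoring/logging tools (e.g., ELK, Prometheus, Grafana) to investigate deeper.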
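The exact commands depend on how the cluster is installed. This sketch makes two assumptions:

- the nodes run the kubelet as a systemd unit;
- the control plane runs kube-scheduler as a static pod labelled `component=kube-scheduler`, as kubeadm sets it up.

Under those assumptions, the hypothetical helpers below filter recent kubelet messages and fetch scheduler logs. Managed Kubernetes services usually expose control plane logs through their own logging tools instead.

```bash
# Hypothetical helpers; adjust to how your cluster runs its components.

# Kubelet messages from the last 30 minutes that mention errors or failures
# (run this on the node itself; assumes a systemd unit named "kubelet").
kubelet_errors() {
  journalctl -u kubelet --since "30 min ago" --no-pager | grep -iE 'error|failed'
}

# Last 200 lines from kube-scheduler pods labelled component=kube-scheduler.
scheduler_logs() {
  kubectl logs -n kube-system -l component=kube-scheduler --tail=200
}
```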

14. Scale Down and Restart

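- If the pods are stuck in a bad state, try scaling the deployment down to zero and then back up. This causes downtime while no replicas are running. It will not fix a misconfiguration in the deployment itself.

```bash
kubectl scale deployment <deployment-name> --replicas=0 -n <namespace>
kubectl scale deployment <deployment-name> --replicas=<desired-number> -n <namespace>
```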
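If the goal is only to replace the pods, `kubectl rollout restart` does it through the deployment's update strategy. With the default `RollingUpdate` strategy, some replicas keep serving throughout. The sketch below is a hypothetical helper that restarts a deployment and waits for the new pods (the 120-second timeout is arbitrary).

```bash
# Hypothetical helper: restart all pods of a deployment through a rollout
# and wait up to 120 seconds for the new pods to become available.
# Usage: restart_deployment <deployment-name> <namespace>
restart_deployment() {
  kubectl rollout restart "deployment/$1" -n "$2" &&
    kubectl rollout status "deployment/$1" -n "$2" --timeout=120s
}
```

If a recent change caused the failure, `kubectl rollout undo deployment/<deployment-name> -n <namespace>` returns to the previous revision instead.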

15. Leverage Debugging Tools

- Use `kubectl exec` to inspect containers interactively. Replace `/bin/bash` with `/bin/sh` if the image does not include Bash.

```bash
kubectl exec -it <pod-name> -n <namespace> -- /bin/bash
```

- Use `kubectl port-forward` to test application endpoints locally:

```bash
kubectl port-forward <pod-name> <local-port>:<container-port> -n <namespace>
```
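Many minimal or distroless images contain no shell at all, so `kubectl exec` has nothing to run. In that case, `kubectl debug` can attach an ephemeral container to the running pod. This sketch makes three assumptions:

- the cluster supports ephemeral containers (enabled by default since Kubernetes v1.23);
- `busybox` is an acceptable debug image;
- `debug_pod` is a hypothetical helper name.

```bash
# Hypothetical helper: attach an interactive ephemeral busybox container to a
# running pod. --target shares the named container's process namespace
# (if the container runtime supports it), so its processes are visible.
# Usage: debug_pod <pod-name> <namespace> <container-name>
debug_pod() {
  kubectl debug -it "$1" -n "$2" --image=busybox --target="$3" -- sh
}
```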

16. Review RBAC and Permissions

- Ensure that the pod's service account has the necessary permissions if the pod interacts with the Kubernetes API or other cluster resources:

```bash
kubectl auth can-i <verb> <resource> -n <namespace> --as=system:serviceaccount:<namespace>:<service-account-name>
```
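To check everything the pod's identity may do, rather than one verb at a time, combine `kubectl auth can-i --list` with impersonation. The hypothetical helper below looks up the pod's service account and lists its permissions in the pod's namespace. Impersonating a service account requires that your own user has `impersonate` permission.

```bash
# Hypothetical helper: list the permissions of a pod's service account in
# the pod's namespace (falls back to "default" if none is set).
# Usage: pod_permissions <pod-name> <namespace>
pod_permissions() {
  local sa
  sa=$(kubectl get pod "$1" -n "$2" -o jsonpath='{.spec.serviceAccountName}')
  kubectl auth can-i --list -n "$2" --as="system:serviceaccount:$2:${sa:-default}"
}
```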

17. Consult Logs from External Systems

- If the pod interacts with external systems (e.g., databases, APIs), check for connectivity and authentication issues.

By systematically going through these steps, you should be able to identify and resolve the root cause of your pod deployment issues in Kubernetes. If the issue persists, consider reaching out to community forums or support channels with specific details for further assistance.
