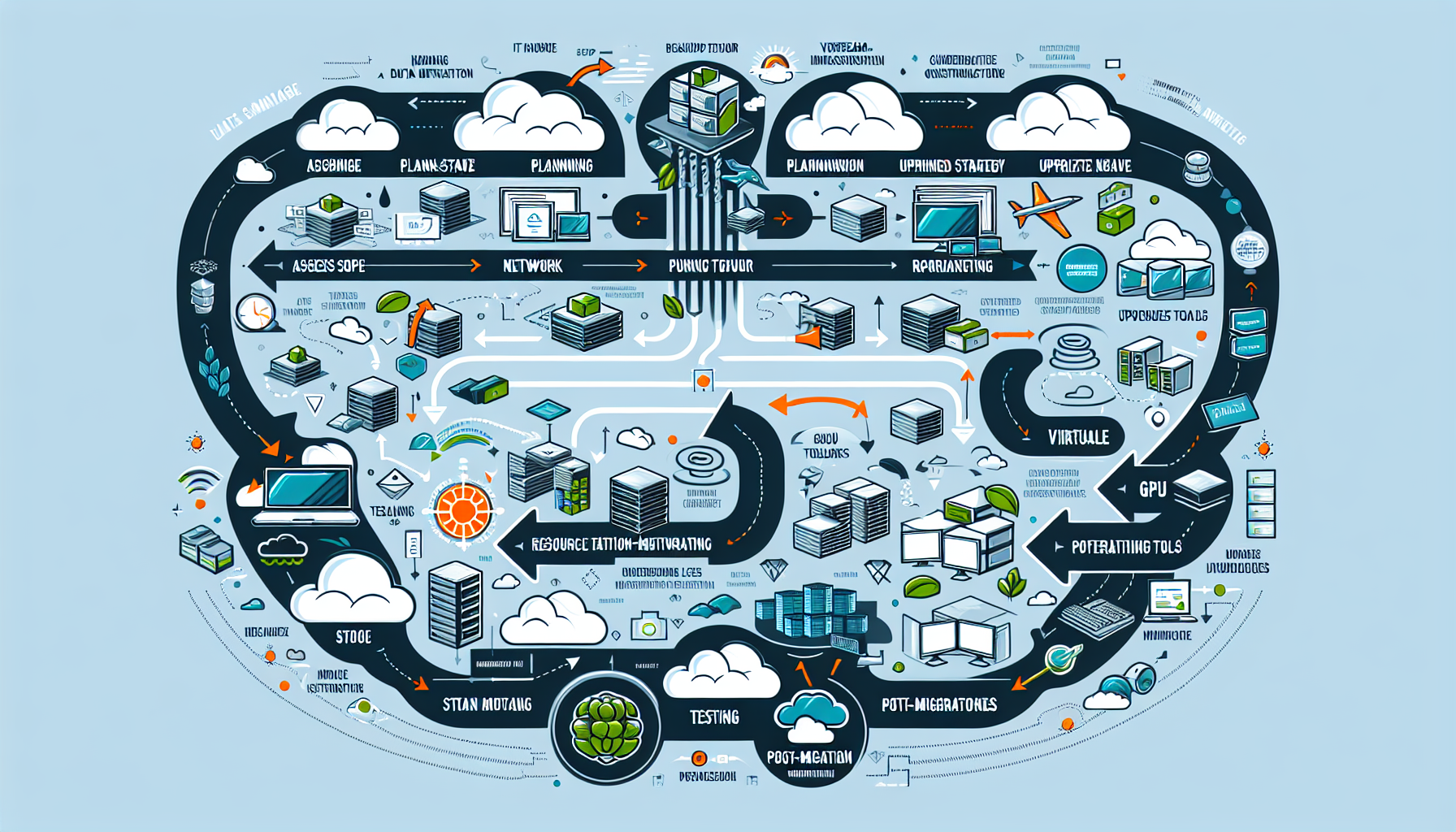

Optimizing IT infrastructure for a large-scale data migration takes careful planning, resource allocation, and testing so that the move is efficient, reliable, and causes minimal downtime. The steps and best practices below cover the main areas to address:

1. Assess the Scope of Migration

- Understand the Data: Analyze the size, type, structure, and sensitivity of the data to be migrated.

- Source and Destination: Evaluate the current infrastructure (source) and destination (whether it’s on-premises, cloud, or hybrid).

- Dependencies: Identify dependencies on applications, databases, and services that are tied to the data.

2. Plan and Document the Migration Strategy

- Set Objectives: Define clear goals (e.g., performance improvement, cost reduction, or scalability).

- Migration Type: Choose the migration type (e.g., lift-and-shift, re-platforming, or modernization).

- Timeline: Create a migration schedule with milestones to minimize disruptions.

- Risk Assessment: Identify potential risks and plan contingencies (e.g., rollback procedures).

3. Upgrade Network and Bandwidth

- Network Optimization: Ensure the network infrastructure can handle large-scale data transfers without bottlenecks. Upgrade switches, routers, and firewalls if needed.

- Bandwidth Allocation: Allocate sufficient bandwidth for data migration processes while ensuring regular operations are not impacted.

- WAN Acceleration Tools: Use WAN optimization products such as Riverbed SteelHead or Citrix's SD-WAN offerings to speed up data transfers over wide-area networks.
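
As a rough planning aid for the bandwidth points above, the transfer-time arithmetic can be sketched in Python. The function name, the 70% default utilization, and the use of decimal terabytes are illustrative assumptions; real throughput is usually lower once protocol overhead, latency, and many small files are taken into account.

```python
def estimate_transfer_hours(data_tb: float, link_gbps: float,
                            utilization: float = 0.7) -> float:
    """Estimate the hours needed to move data_tb terabytes over a link.

    utilization is the assumed fraction of the link that the migration
    is allowed to use, leaving the rest for regular operations.
    """
    if data_tb < 0 or link_gbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("invalid size, link speed, or utilization")
    data_bits = data_tb * 1e12 * 8               # decimal terabytes to bits
    effective_bps = link_gbps * 1e9 * utilization
    return data_bits / effective_bps / 3600      # seconds to hours


if __name__ == "__main__":
    hours = estimate_transfer_hours(data_tb=100, link_gbps=10)
    print(f"Estimated transfer time: {hours:.1f} hours")
```

For example, 100 TB over a 10 Gbps link at 70% utilization comes to roughly 32 hours, which helps decide whether an offline transfer appliance is worth considering.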

4. Leverage Storage Solutions

- High-Performance Storage: Use NVMe or SSD-based storage for staging and temporary storage during migration to speed up data transfers.

- Data Deduplication: Reduce redundant data to minimize the amount of data transferred.

- Compression: Compress data before transferring to reduce bandwidth consumption.
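
To make the deduplication and compression ideas concrete, here is a minimal file-level sketch using only the Python standard library. The helper names (`sha256_of`, `group_duplicates`, `gzip_file`) are hypothetical, and production deduplication is usually done at the block level by the storage or backup platform rather than by a script like this.

```python
import gzip
import hashlib
import shutil
from collections import defaultdict
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def group_duplicates(root: Path) -> dict[str, list[Path]]:
    """Return groups of files under root that share identical content."""
    groups: dict[str, list[Path]] = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            groups[sha256_of(path)].append(path)
    return {h: paths for h, paths in groups.items() if len(paths) > 1}


def gzip_file(src: Path, dst: Path) -> None:
    """Write a gzip-compressed copy of src to dst."""
    with src.open("rb") as fin, gzip.open(dst, "wb") as fout:
        shutil.copyfileobj(fin, fout)
```

Only one file from each duplicate group needs to be transferred; the others can be recreated at the destination from that copy. Compression helps most on text-like data and very little on data that is already compressed, such as media files or archives.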

5. Virtualization and Containers

- Virtual Machines: Use virtual machines for staging environments or testing migration processes without impacting production systems.

- Containers: For applications tied to the data, consider packaging workloads as container images (e.g., with Docker) and running them on Kubernetes for portability and faster migration.

6. Optimize Backup and Recovery

- Pre-Migration Backup: Create a comprehensive backup of all data before starting migration to safeguard against data loss.

- Replication: Use tools such as Veeam or Commvault, or a storage vendor's replication software (for example, from Dell EMC), to replicate data to the destination in real time or near real time.

- Disaster Recovery Plan: Ensure the DR plan is updated and tested before starting migration.

7. Utilize Automation Tools

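- Migration Tools: Use purpose-built tools such as AWS DataSync, Azure Migrate, or Google Cloud Storage Transfer Service for online transfers, rsync for host-to-host file copies, or Google Transfer Appliance when shipping data offline is faster than sending it over the network.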

- Scripting: Develop custom scripts to automate repetitive tasks and monitor progress.

- Orchestration Platforms: Manage infrastructure changes during migration with tools such as Terraform (provisioning infrastructure as code) and Ansible (configuring hosts and running tasks).
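
As an illustration of the kind of custom script mentioned above, the following sketch copies a directory tree with retries and progress logging. The function names, the retry count, and the linear backoff are assumptions chosen for the example; a dedicated migration tool is generally a better fit for production transfers.

```python
import logging
import shutil
import time
from pathlib import Path

log = logging.getLogger("migration")


def copy_with_retry(src: Path, dst: Path, attempts: int = 3,
                    backoff_s: float = 1.0) -> None:
    """Copy one file, retrying on OS-level errors with a growing delay."""
    for attempt in range(1, attempts + 1):
        try:
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves timestamps
            return
        except OSError as exc:
            if attempt == attempts:
                raise
            log.warning("attempt %d failed for %s: %s", attempt, src, exc)
            time.sleep(backoff_s * attempt)


def migrate_tree(src_root: Path, dst_root: Path) -> int:
    """Copy every file under src_root to dst_root and return the file count."""
    files = [p for p in sorted(src_root.rglob("*")) if p.is_file()]
    for index, src in enumerate(files, start=1):
        copy_with_retry(src, dst_root / src.relative_to(src_root))
        log.info("copied %d/%d: %s", index, len(files), src)
    return len(files)
```

Calling `logging.basicConfig(level=logging.INFO)` before `migrate_tree(...)` prints one progress line per file, which is enough to follow a small pilot run.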

8. Monitor Resources

- Real-Time Monitoring: Use monitoring tools like Prometheus, Grafana, SolarWinds, or Nagios to track server, storage, and network performance during migration.

- Resource Scalability: Ensure compute resources (CPU, RAM, GPU if applicable) can scale dynamically to handle peak loads.
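
Alongside the monitoring tools named above, a simple pre-flight check can catch an undersized staging volume before a transfer starts. This is a standard-library sketch only; the function name and the 20% safety margin are assumptions, and it does not replace continuous monitoring.

```python
import shutil


def has_headroom(path: str, required_bytes: int, margin: float = 0.2) -> bool:
    """Return True if the volume holding path can fit required_bytes
    plus an extra safety margin (20% by default)."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_bytes * (1 + margin)


if __name__ == "__main__":
    needed = 500 * 10**9  # assumed size of the next batch: 500 GB
    print("enough space" if has_headroom(".", needed) else "not enough space")
```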

9. Optimize Kubernetes for Data Migration

- Persistent Volumes: Back stateful workloads with PersistentVolumes and PersistentVolumeClaims so that data survives pod restarts and rescheduling during migration.

- Storage Classes: Use dynamic storage provisioning with appropriate storage classes for scalable and efficient data handling.

- Node Affinity: Use node affinity rules to schedule migration pods on nodes close to the data source or destination (for example, in the same zone).
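
The three Kubernetes points above can be sketched as manifests built in Python and serialized to JSON, which `kubectl apply -f` accepts alongside YAML. The resource names, the `fast-ssd` storage class, the image, and the zone value are all hypothetical placeholders; the field names follow the core `v1` PersistentVolumeClaim and Pod schemas.

```python
import json


def staging_pvc(name: str, storage_class: str, size: str) -> dict:
    """PersistentVolumeClaim that asks a storage class to provision a volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def migration_pod(name: str, image: str, claim_name: str, zone: str) -> dict:
    """Pod pinned to one zone via node affinity, with the claim mounted."""
    zone_rule = {
        "key": "topology.kubernetes.io/zone",
        "operator": "In",
        "values": [zone],
    }
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "affinity": {"nodeAffinity": {
                "requiredDuringSchedulingIgnoredDuringExecution": {
                    "nodeSelectorTerms": [{"matchExpressions": [zone_rule]}]
                }
            }},
            "containers": [{
                "name": "migrator",
                "image": image,
                "volumeMounts": [{"name": "staging", "mountPath": "/staging"}],
            }],
            "volumes": [{
                "name": "staging",
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


if __name__ == "__main__":
    # All values below are placeholders for illustration.
    print(json.dumps(staging_pvc("migration-staging", "fast-ssd", "500Gi"), indent=2))
    print(json.dumps(migration_pod("migrator", "example.com/migrator:latest",
                                   "migration-staging", "us-east-1a"), indent=2))
```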

10. GPU Workloads for AI/ML Data

- GPU Optimization: If migrating data tied to AI/ML workloads, ensure GPU-enabled servers are properly configured at the destination.

- Drivers and Frameworks: Validate compatibility of GPU drivers (e.g., NVIDIA CUDA) and AI/ML frameworks (TensorFlow, PyTorch) post-migration.

- Data Partitioning: Divide large datasets into smaller chunks to ensure efficient GPU processing after migration.
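
For the data partitioning point, one simple approach is to split a dataset into fixed-size, half-open ranges of bytes or records that can be transferred or loaded independently. The function name and the chunk sizes are illustrative assumptions; the right chunk size depends on the workload and the GPU memory available.

```python
def partition_ranges(total_size: int, chunk_size: int) -> list[tuple[int, int]]:
    """Split [0, total_size) into half-open (start, end) ranges.

    Every range has chunk_size items except possibly the last one.
    """
    if total_size < 0 or chunk_size <= 0:
        raise ValueError("total_size must be >= 0 and chunk_size must be > 0")
    return [
        (start, min(start + chunk_size, total_size))
        for start in range(0, total_size, chunk_size)
    ]


if __name__ == "__main__":
    # Ten records in chunks of four: the last chunk is shorter.
    print(partition_ranges(10, 4))  # [(0, 4), (4, 8), (8, 10)]
```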

11. Test Before Migration

- Pilot Migration: Perform a test migration on a subset of data to validate the process and infrastructure.

- Performance Testing: Measure throughput and latency of data transfers during testing.

- Rollback Validation: Verify that rollback procedures work reliably in case of failure.
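
During a pilot migration, throughput can be measured with a small timing wrapper like the sketch below. The function name is hypothetical, and results on a single machine can be inflated by the operating system's page cache, so figures are most meaningful when the copy crosses the real network path.

```python
import shutil
import time
from pathlib import Path


def measure_copy_throughput(src: Path, dst: Path) -> float:
    """Copy src to dst and return the observed throughput in MB/s (decimal)."""
    size_bytes = src.stat().st_size
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    # Guard against a zero interval on very small files.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return size_bytes / 1e6 / elapsed
```

Running this against a representative sample of files, and comparing the result with the planned bandwidth allocation, shows whether the migration window is realistic.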

12. Post-Migration Optimization

- Validation: Verify that all data has been successfully migrated and applications are functioning properly.

- Performance Tuning: Optimize storage, network, and compute resources at the destination.

- Documentation: Update documentation with new configurations and lessons learned.
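
The validation step can be partly automated by comparing checksums between the source and destination. The sketch below assumes both sides are reachable as file system paths; the function names and the shape of the report are illustrative choices, not a standard format.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def verify_migration(src_root: Path, dst_root: Path) -> dict[str, list[str]]:
    """List source files that are missing or differ at the destination."""
    report: dict[str, list[str]] = {"missing": [], "mismatched": []}
    for src in sorted(src_root.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(src_root)
        dst = dst_root / rel
        if not dst.is_file():
            report["missing"].append(rel.as_posix())
        elif sha256_of(src) != sha256_of(dst):
            report["mismatched"].append(rel.as_posix())
    return report
```

An empty report means every source file exists at the destination with identical content. It does not confirm that applications work against the migrated data, so functional checks are still needed.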

Tools to Consider

- Storage Migration: VMware vSphere Storage vMotion, NetApp SnapMirror, Dell EMC PowerPath Migration Enabler.

- Backup/Replication: Veeam Backup & Replication, Commvault, Rubrik.

- Cloud Migration: AWS Snowball, Azure Data Box, Google Cloud Storage Transfer Service.

- Monitoring: Prometheus + Grafana, SolarWinds, Datadog, Zabbix.

Key Takeaways

- Plan meticulously to avoid downtime or data loss.

- Use high-performance storage and network solutions to minimize bottlenecks.

- Leverage automation and orchestration tools for efficiency.

- Test extensively to ensure reliability and minimize risks.

How do I optimize IT infrastructure for large-scale data migrations?