Configuring High-Availability Clusters for Databases: A Step-by-Step Enterprise Guide

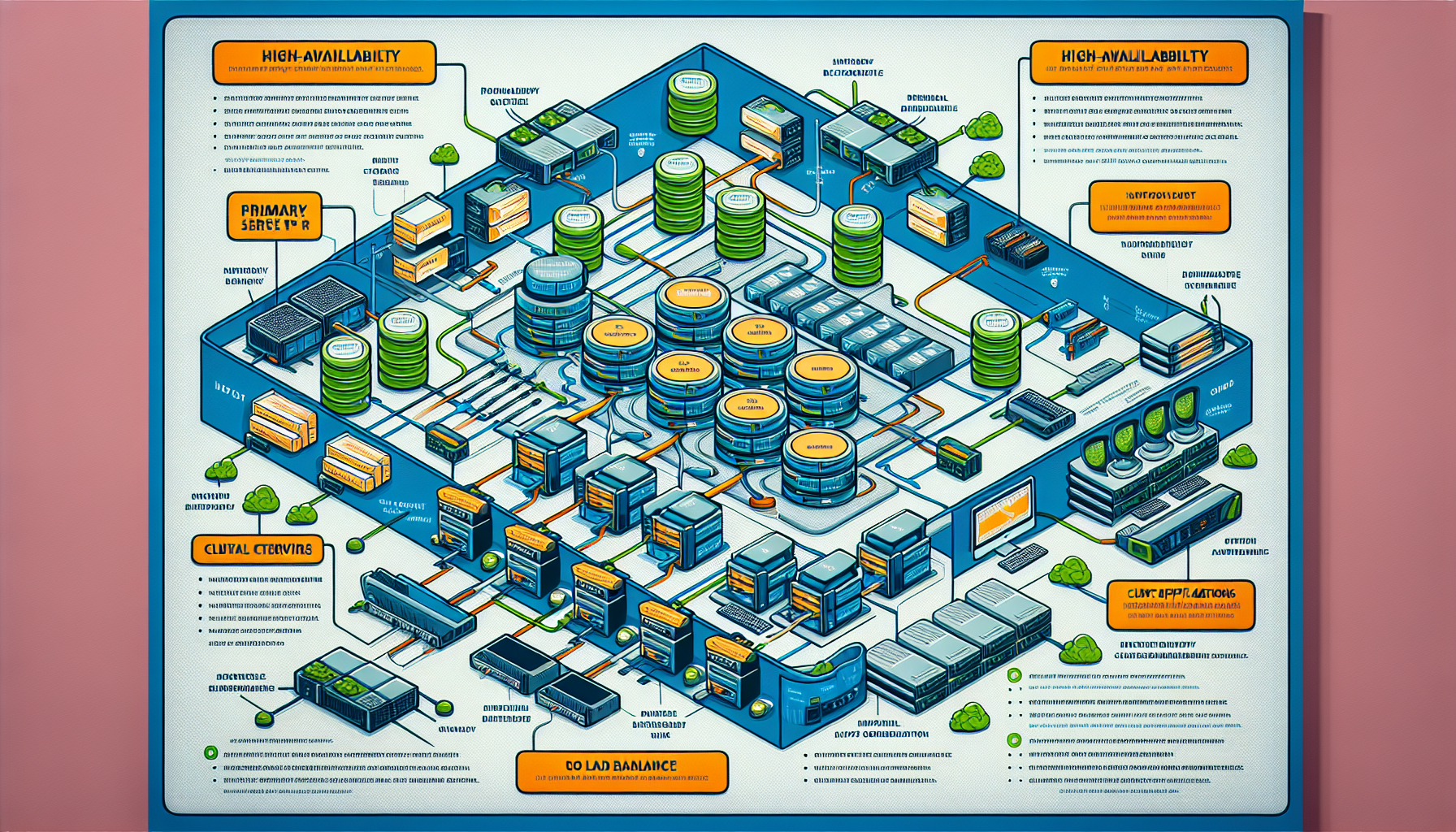

High-availability (HA) clusters ensure that critical database systems remain accessible even during hardware failures, network interruptions, or planned maintenance. In enterprise environments, HA is essential for meeting SLAs, maintaining business continuity, and preventing costly downtime.

This guide details how to design, configure, and maintain a high-availability database cluster using best practices for modern IT infrastructures, including virtualization, Kubernetes, and cloud platforms.

1. Understand High-Availability Principles

Before configuring anything, make sure the core HA concepts are clear:

- Redundancy: Multiple nodes to handle failover.

- Failover Mechanism: Automated switch to standby nodes when the primary fails.

- Replication: Synchronous (real-time) or asynchronous (near-real-time) copying of data from the primary to standby nodes.

- Quorum & Fencing: Mechanisms to prevent split-brain scenarios in clustered databases.

2. Choose the Right HA Architecture

Select the HA method based on your database type:

- Active-Passive: One primary node, one or more standby nodes (common for PostgreSQL and MySQL).

- Active-Active: All nodes handle queries simultaneously (common for distributed databases like Galera Cluster or CockroachDB).

- Shared Storage: Cluster nodes access the same storage via SAN/NAS.

- Replication-Based: Nodes maintain their own storage, synchronized via database replication.

3. Prepare the Infrastructure

Step 3.1 – Hardware and Network

- Use at least two physical or virtual nodes. A third node (or a quorum device) lets the cluster keep a majority when one node fails.

- Use redundant NICs and switches to avoid single points of failure.

- Use low-latency, high-bandwidth interconnects (preferably 10GbE or faster).

Step 3.2 – Storage

- For shared storage: Implement multipath I/O for redundancy.

- For replication-based clusters: Use SSD/NVMe storage on every node so standbys can replay changes as fast as the primary writes them.

4. Install and Configure Cluster Management Software

Cluster managers handle node membership, failover, and fencing.

Popular choices:

- Pacemaker + Corosync (Linux-based HA clustering)

- Kubernetes StatefulSets + Operators (Cloud-native HA)

- Windows Server Failover Clustering (WSFC for SQL Server)

Example: Pacemaker + Corosync for PostgreSQL

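Install required packages (on Debian/Ubuntu the fence agents are packaged as fence-agents; fence-agents-all is the RHEL-family name)

sudo apt update

sudo apt install pacemaker corosync pcs fence-agents

Enable and start services

sudo systemctl enable pcsd

sudo systemctl start pcsd

Authenticate cluster nodes (set the same password for the hacluster user on every node first, for example with sudo passwd hacluster; on pcs 0.10 and later this command is pcs host auth)

sudo pcs cluster auth node1.example.com node2.example.com -u hacluster -p StrongPassword

Create and start the cluster (pcs 0.10 and later takes the cluster name as the first argument, without --name)

sudo pcs cluster setup --name pgsql_cluster node1.example.com node2.example.com

sudo pcs cluster start --all

sudo pcs cluster enable --all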

5. Configure Database Replication

PostgreSQL Streaming Replication Example

On Primary Node:

Enable replication in postgresql.conf

echo "wal_level = replica" >> /etc/postgresql/15/main/postgresql.conf

echo "max_wal_senders = 5" >> /etc/postgresql/15/main/postgresql.conf

echo "hot_standby = on" >> /etc/postgresql/15/main/postgresql.conf

Allow replication in pg_hba.conf

echo "host replication replicator 192.168.10.0/24 md5" >> /etc/postgresql/15/main/pg_hba.conf

Restart PostgreSQL

systemctl restart postgresql
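The pg_hba.conf rule above refers to a `replicator` role, but the steps do not show how that role is created. A minimal sketch follows, assuming the role does not exist yet and that you can run `psql` as the `postgres` OS user on the primary. The helper name `replication_role_sql` and the password shown in the usage comment are placeholders, not part of the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SQL that creates a replication-only role.
# Single quotes in the password are doubled so the SQL literal stays valid.
replication_role_sql() {
  local role="$1" password="$2" escaped
  escaped="$(printf '%s' "$password" | sed "s/'/''/g")"
  printf "CREATE ROLE %s WITH REPLICATION LOGIN PASSWORD '%s';\n" "$role" "$escaped"
}

# Usage on the primary (not executed here; the password is a placeholder):
#   replication_role_sql replicator 'ChangeMe' | sudo -u postgres psql
```

Printing the statement instead of running it directly makes it easy to review before it reaches the database.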

On Standby Node:

systemctl stop postgresql

rm -rf /var/lib/postgresql/15/main/*

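Take the base backup as the postgres OS user so the new data directory has the ownership PostgreSQL requires

sudo -u postgres pg_basebackup -h primary-db.example.com -U replicator -D /var/lib/postgresql/15/main -P -R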

systemctl start postgresql
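Once the standby is running, it is worth confirming that it is actually in recovery and that the primary sees it. The sketch below is one way to check, assuming local `psql` access as the `postgres` OS user on each node. The function names are hypothetical; `pg_is_in_recovery()` and the `pg_stat_replication` view are standard PostgreSQL.

```shell
#!/usr/bin/env bash
# Map the output of "SELECT pg_is_in_recovery();" (t or f) to a role name.
role_from_recovery_flag() {
  case "$1" in
    t) echo "standby" ;;
    f) echo "primary" ;;
    *) echo "unknown" ;;
  esac
}

# Ask the local PostgreSQL instance which role it currently has.
# Assumes passwordless local access for the postgres OS user.
local_pg_role() {
  local flag
  flag="$(sudo -u postgres psql -At -c 'SELECT pg_is_in_recovery();' 2>/dev/null)"
  role_from_recovery_flag "$flag"
}

# On the primary, list connected standbys and their replication state.
show_standbys() {
  sudo -u postgres psql -c \
    'SELECT client_addr, state, sync_state FROM pg_stat_replication;'
}
```

Running `local_pg_role` on each node should report one `primary` and one `standby`. On the primary, `show_standbys` should list the standby with state `streaming`.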

6. Implement Fencing & Quorum

- Fencing: Prevents failed nodes from writing to storage after a failure. Use IPMI, iLO, or DRAC for hardware-level fencing.

- Quorum: Ensures cluster decisions are made only when a majority of nodes agree, avoiding split-brain scenarios.
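The majority rule can be made concrete with a little arithmetic. The sketch below assumes every node carries one vote. The function names `quorum_votes` and `has_quorum` are illustrative and do not belong to any cluster tool.

```shell
#!/usr/bin/env bash
# Votes needed for a majority in a cluster of N equally weighted nodes.
quorum_votes() {
  local nodes="$1"
  echo $(( nodes / 2 + 1 ))
}

# Succeeds (exit 0) when the surviving nodes still form a majority.
has_quorum() {
  local total="$1" alive="$2"
  [ "$alive" -ge "$(quorum_votes "$total")" ]
}

# Show how many node failures each cluster size can tolerate.
for n in 2 3 5; do
  echo "nodes=$n quorum=$(quorum_votes "$n") tolerated_failures=$(( n - $(quorum_votes "$n") ))"
done
```

For two nodes the majority is two, so losing either node costs the cluster its quorum. Three nodes tolerate one failure and five tolerate two. This is why two-node clusters usually rely on a quorum device or on Corosync's `two_node` votequorum option together with working fencing.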

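Example: Configure quorum and fencing in Pacemaker

sudo pcs property set no-quorum-policy=stop

sudo pcs stonith create fence_ipmilan fence_ipmilan pcmk_host_list="node1.example.com node2.example.com" ipaddr="192.168.10.100" login="admin" passwd="StrongPass" lanplus="true"

Note: the command above points both nodes at a single IPMI address. Each server normally has its own BMC, so in practice create one fence device per node, each with that node's name in pcmk_host_list and that node's BMC address in ipaddr.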

7. Test Failover Scenarios

Simulate failures to ensure HA works as expected:

Simulate node failure

sudo pcs cluster stop node1.example.com

Check cluster status

sudo pcs status

Validate:

- Standby node promotion.

- No data loss.

- Application reconnects automatically.
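Automatic reconnection usually depends on the client retrying while the standby is being promoted. A minimal retry-with-backoff sketch follows. The function name `retry_with_backoff` and the host `db-vip.example.com` in the usage comment are hypothetical; `pg_isready` is the standard PostgreSQL connection check utility.

```shell
#!/usr/bin/env bash
# Run a command until it succeeds, doubling the wait between attempts.
# Arguments: max attempts, initial delay in seconds, then the command.
retry_with_backoff() {
  local max_attempts="$1" delay="$2"
  shift 2
  local attempt=1
  while true; do
    if "$@"; then
      return 0
    fi
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    sleep "$delay"
    delay=$(( delay * 2 ))
    attempt=$(( attempt + 1 ))
  done
}

# Usage during a failover test (not executed here; the host is a placeholder):
#   retry_with_backoff 6 1 pg_isready -h db-vip.example.com -p 5432
```

Timing how long this loop takes to succeed during a test gives a rough measure of the outage an application would see.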

8. Monitor and Maintain

- Monitoring Tools: Use Prometheus + Grafana or Zabbix to track node health, replication lag, and failover events.

- Regular Backups: HA is not a substitute for backups—implement a robust backup schedule.

- Patch Management: Keep OS, database, and cluster software updated.
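Replication lag can also be checked from the shell before a full monitoring stack is in place. The sketch below assumes local `psql` access as the `postgres` OS user on a standby. The function names and the 30-second threshold in the usage comment are illustrative; `pg_last_xact_replay_timestamp()` is a standard PostgreSQL function.

```shell
#!/usr/bin/env bash
# Classify a replication lag value (seconds, possibly fractional) against
# a threshold. An empty value means nothing has been replayed yet.
lag_status() {
  local lag="$1" threshold="$2"
  if [ -z "$lag" ]; then
    echo "UNKNOWN"
    return
  fi
  local whole="${lag%.*}"   # drop the fractional part for integer comparison
  if [ "${whole:-0}" -gt "$threshold" ]; then
    echo "WARN"
  else
    echo "OK"
  fi
}

# Read the replay delay on a standby (assumes local access as postgres).
standby_lag_seconds() {
  sudo -u postgres psql -At -c \
    "SELECT EXTRACT(EPOCH FROM now() - pg_last_xact_replay_timestamp());"
}

# Usage on a standby (not executed here):
#   lag_status "$(standby_lag_seconds)" 30
```

This measure grows while the primary is idle, because no new transactions arrive to be replayed. Treat it as a rough signal and compare it with the primary's `pg_stat_replication` view when in doubt.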

9. Kubernetes-Based HA for Cloud-Native Databases

For cloud-native deployments, use StatefulSets managed by an Operator (e.g., Crunchy Data's Postgres Operator (PGO) or the Percona Operator for MySQL).

Example PostgreSQL Operator Deployment (Kubernetes YAML)

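    apiVersion: postgres-operator.crunchydata.com/v1beta1
    kind: PostgresCluster
    metadata:
      name: ha-postgres
    spec:
      instances:
        - name: primary
          replicas: 2
      backups:
        pgbackrest:
          repos:
            - name: repo1
              volume:
                size: 50Gi
      monitoring:
        pgmonitor:
          enabled: true

This manifest is abbreviated. The operator's CRD requires additional fields (for example `postgresVersion` and a `dataVolumeClaimSpec` for each instance set), so validate it against the operator version you deploy.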
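After a PostgresCluster manifest is applied, the operator labels the pods it creates, and those labels can be used to find the current primary. The sketch below assumes PGO's label keys `postgres-operator.crunchydata.com/cluster` and `postgres-operator.crunchydata.com/role` with the role value `master`; check these against the operator version in use. The helper name and the file name `ha-postgres.yaml` are hypothetical.

```shell
#!/usr/bin/env bash
# Build the label selector PGO is assumed to put on the current primary pod.
pgo_primary_selector() {
  local cluster="$1"
  printf '%s=%s,%s=master\n' \
    "postgres-operator.crunchydata.com/cluster" "$cluster" \
    "postgres-operator.crunchydata.com/role"
}

# Usage (not executed here; ha-postgres.yaml is a hypothetical file name):
#   kubectl apply -f ha-postgres.yaml
#   kubectl get pods --selector="$(pgo_primary_selector ha-postgres)"
```

Deleting the pod returned by this selector and running the query again is a simple way to watch the operator promote a replica.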

Best Practices Checklist

- ✅ Always test failover before production rollout.

- ✅ Implement fencing to avoid split-brain.

- ✅ Monitor replication lag to avoid stale reads.

- ✅ Use synchronous replication where zero data loss is required.

- ✅ Document recovery procedures for rapid incident response.
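For the synchronous replication item, PostgreSQL decides which standbys must confirm a commit through the `synchronous_standby_names` setting. A minimal sketch follows, assuming a single standby and local superuser access on the primary. The helper name `sync_replication_sql` is hypothetical, and `'*'` (any connected standby) is used here only for brevity.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print SQL that makes commits wait for a standby.
# '*' matches any connected standby; name specific standbys in production.
sync_replication_sql() {
  cat <<'SQL'
ALTER SYSTEM SET synchronous_standby_names = '*';
SELECT pg_reload_conf();
SQL
}

# Usage on the primary (not executed here):
#   sync_replication_sql | sudo -u postgres psql
#   sudo -u postgres psql -c 'SELECT application_name, sync_state FROM pg_stat_replication;'
```

The trade-off is availability. With this setting, commits on the primary wait for a standby to confirm, so writes block if no synchronous standby is connected.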

By following these steps, you can deploy a robust high-availability database cluster that minimizes downtime, ensures data integrity, and meets enterprise-grade reliability standards. This approach works whether you’re running bare-metal servers, VMs, or Kubernetes-native workloads.

Ali YAZICI is a Senior IT Infrastructure Manager with 15+ years of enterprise experience. A recognized expert in datacenter architecture, multi-cloud environments, storage, advanced data protection, and Commvault automation, he currently focuses on next-generation datacenter technologies, including NVIDIA GPU architecture, high-performance server virtualization, and AI-driven tools. He shares practical, hands-on experience through a combination of personal field notes and “Expert-Driven AI”: he uses AI tools as an assistant to structure drafts, which he then heavily edits, fact-checks, and infuses with his own practical experience, original screenshots, and “in-the-trenches” insights that only a human expert can provide.

